By TechTalks -

2021-01-04

Semi-supervised learning helps you solve classification problems when you have too little labeled data to train your machine learning model.

By KDnuggets -

2020-12-15

An extensive overview of active learning, explaining how it works and how it can assist with data labeling, along with its performance and potential limitations.

By IBM Research Blog -

2021-01-26

Teams from IBM labs in Haifa and Dublin have developed software to help assess the privacy risk of AI and reduce the amount of personal data in AI training.

By OpenAI -

2021-01-05

We’re introducing a neural network called CLIP which efficiently learns visual concepts from natural language supervision.

By The Gradient -

2020-11-21

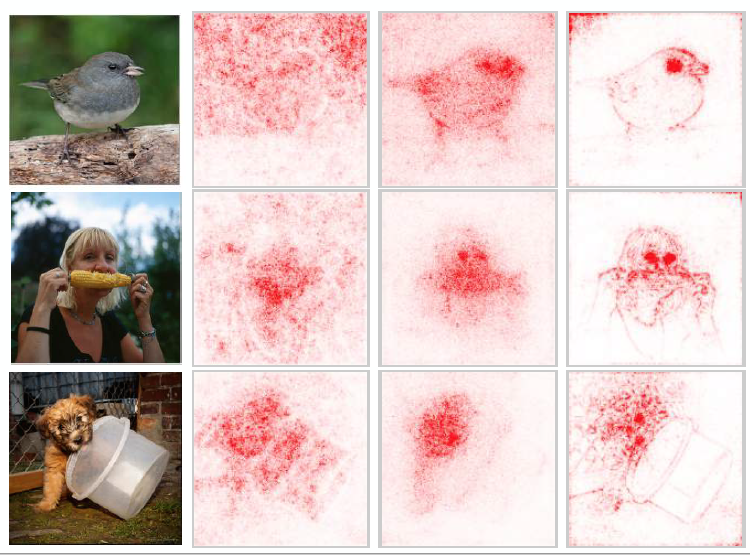

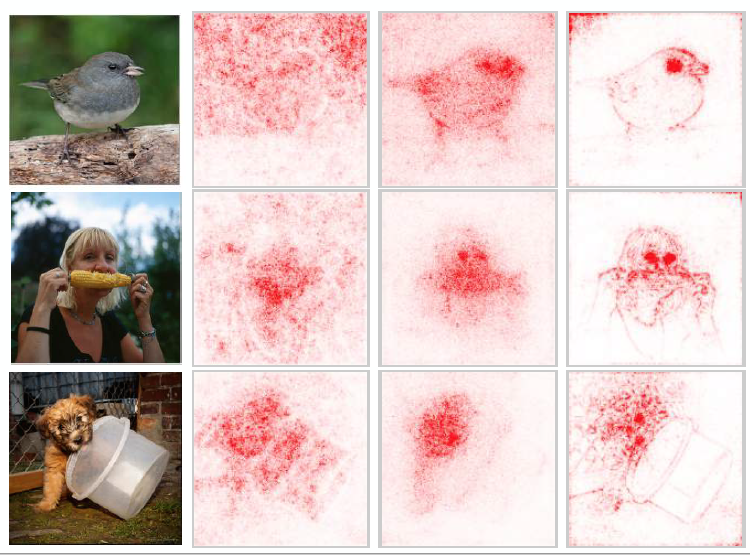

A broad overview of the sub-field of machine learning interpretability: conceptual frameworks, existing research, and future directions.

By Medium -

2020-07-25

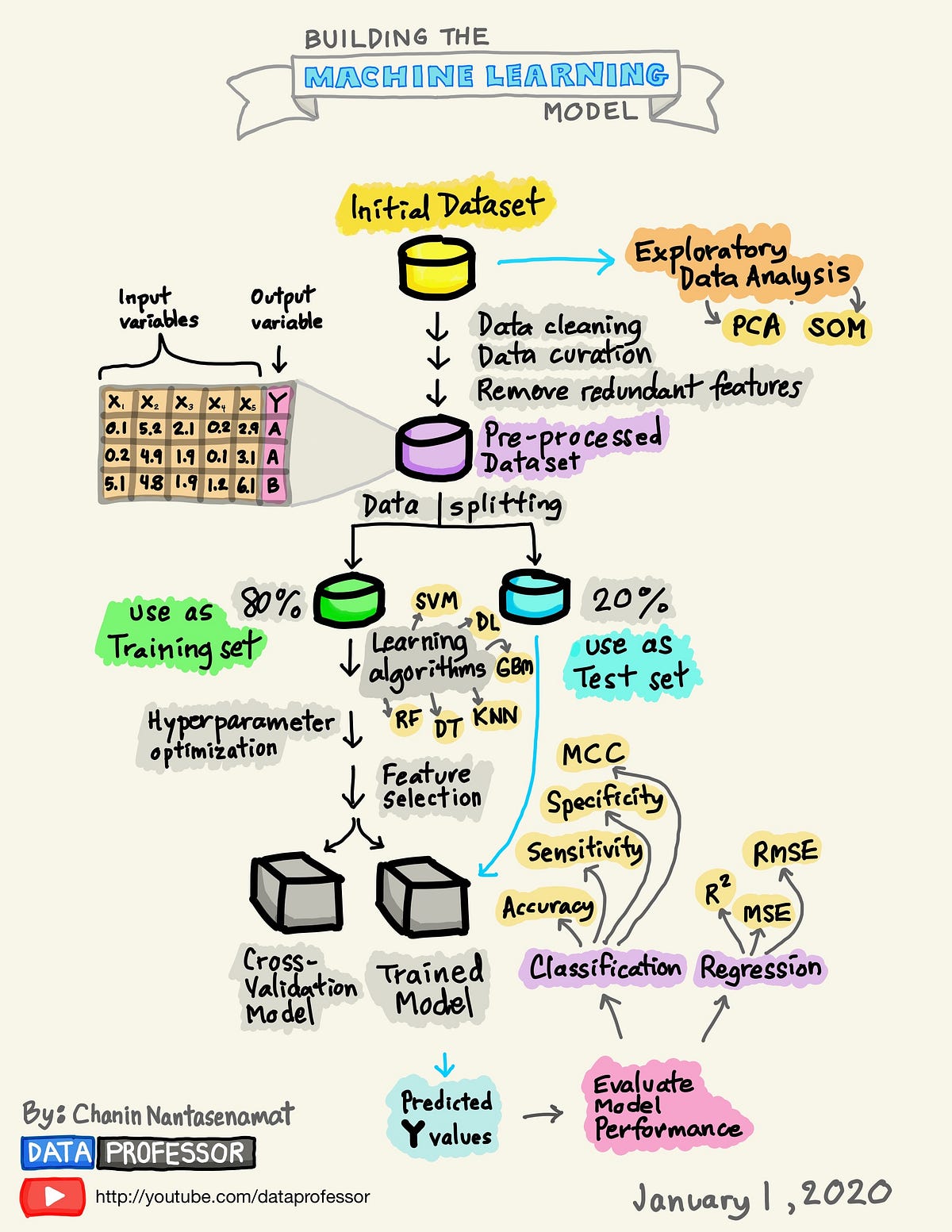

A Visual Guide to Learning Data Science