By ASAPP -

2021-02-24

By ASAPP -

2021-02-24

Highly expressive and efficient neural models can be designed using SRU++ with little attention computation needed.

By KDnuggets -

2021-02-09

By KDnuggets -

2021-02-09

Attention is a powerful mechanism developed to enhance the performance of the Encoder-Decoder architecture on neural network-based machine translation tasks. Learn more about how this process works an ...

By GitHub -

2020-10-05

By GitHub -

2020-10-05

Implementation of Vision Transformer, a simple way to achieve SOTA in vision classification with only a single transformer encoder, in Pytorch - lucidrains/vit-pytorch

By Medium -

2021-02-22

By Medium -

2021-02-22

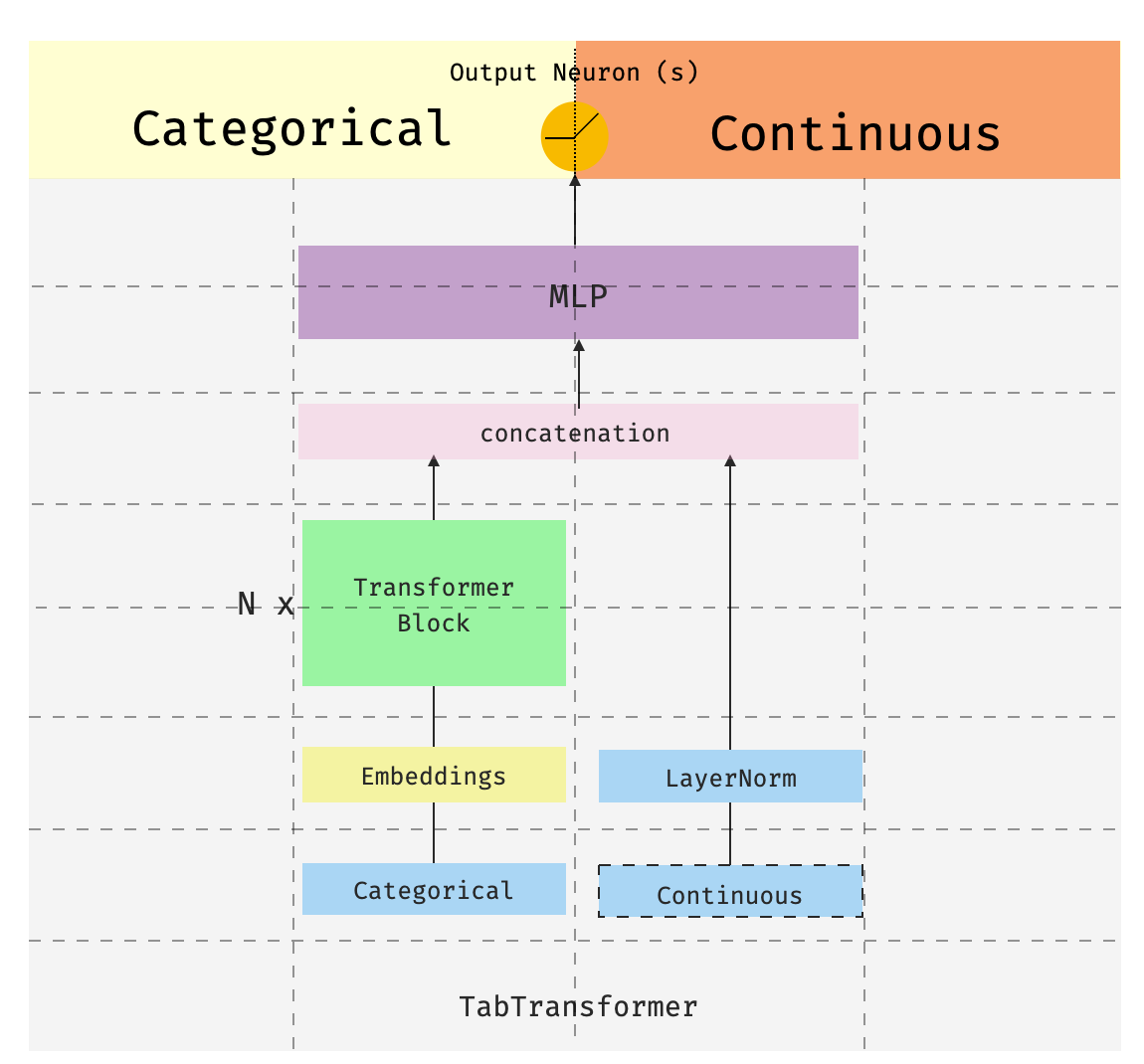

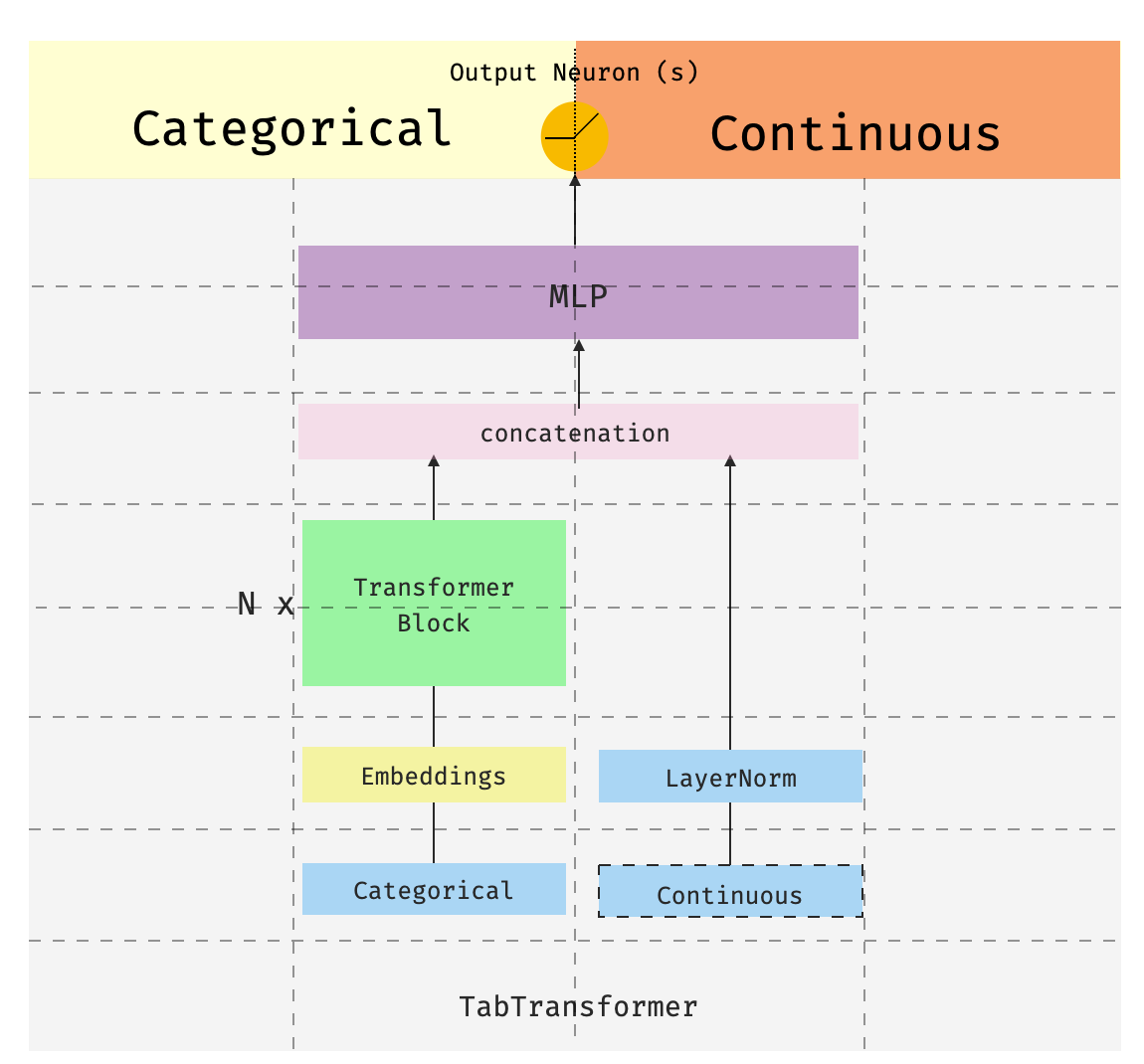

This is the third of a series of posts introducing pytorch-widedeepa flexible package to combine tabular data with text and images (that could also be used for “standard” tabular data alone). The…

By MachineCurve -

2021-02-15

By MachineCurve -

2021-02-15

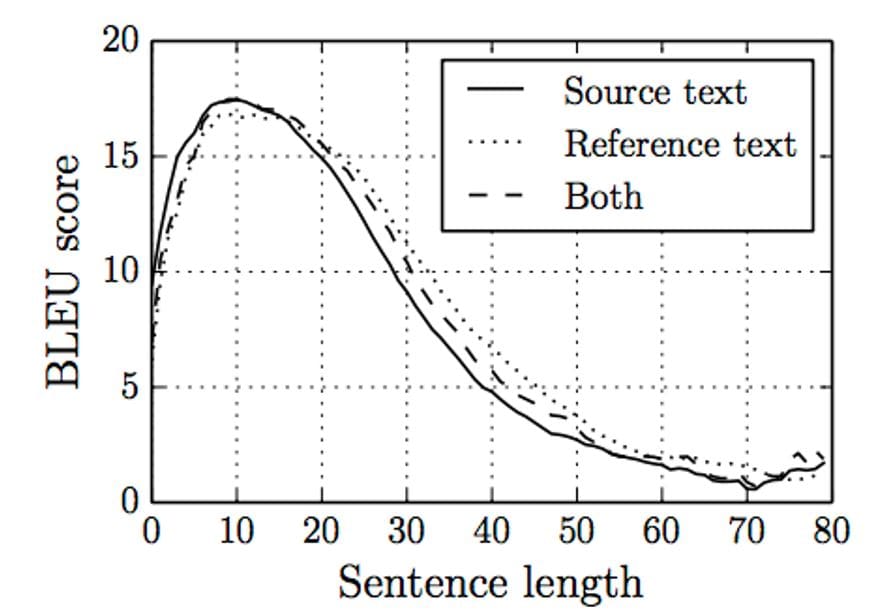

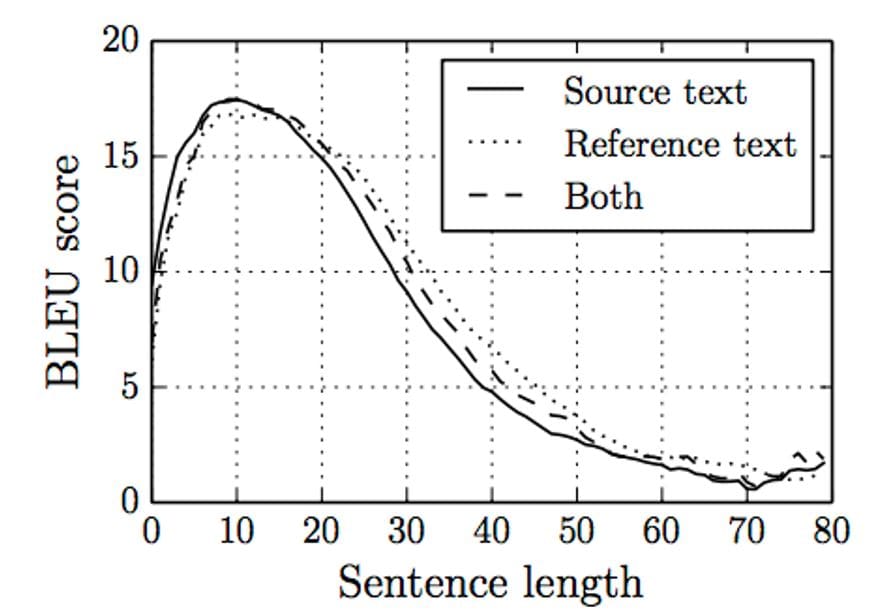

Machine Translation with Python and Transformers - learn how to build an easy pipeline for translation and to extend it to more languages.

By Synced | AI Technology & Industry Review -

2021-01-06

By Synced | AI Technology & Industry Review -

2021-01-06

A new model surpassed human baseline performance on the challenging natural language understanding benchmark.