By Medium -

2020-10-23

Transformer Language Modeling for Akuapem and Asante Twi

By Medium -

2021-02-19

Hugging Face has been on top of every NLP (natural language processing) practitioner's mind with its transformers and datasets libraries. In 2020, we saw some major upgrades in both of these libraries…

By Spell -

2020-12-02

In this guest article from Fritz AI, we'll be outlining the key considerations in building a mobile-focused ML pipeline from end-to-end.

By Medium -

2021-02-22

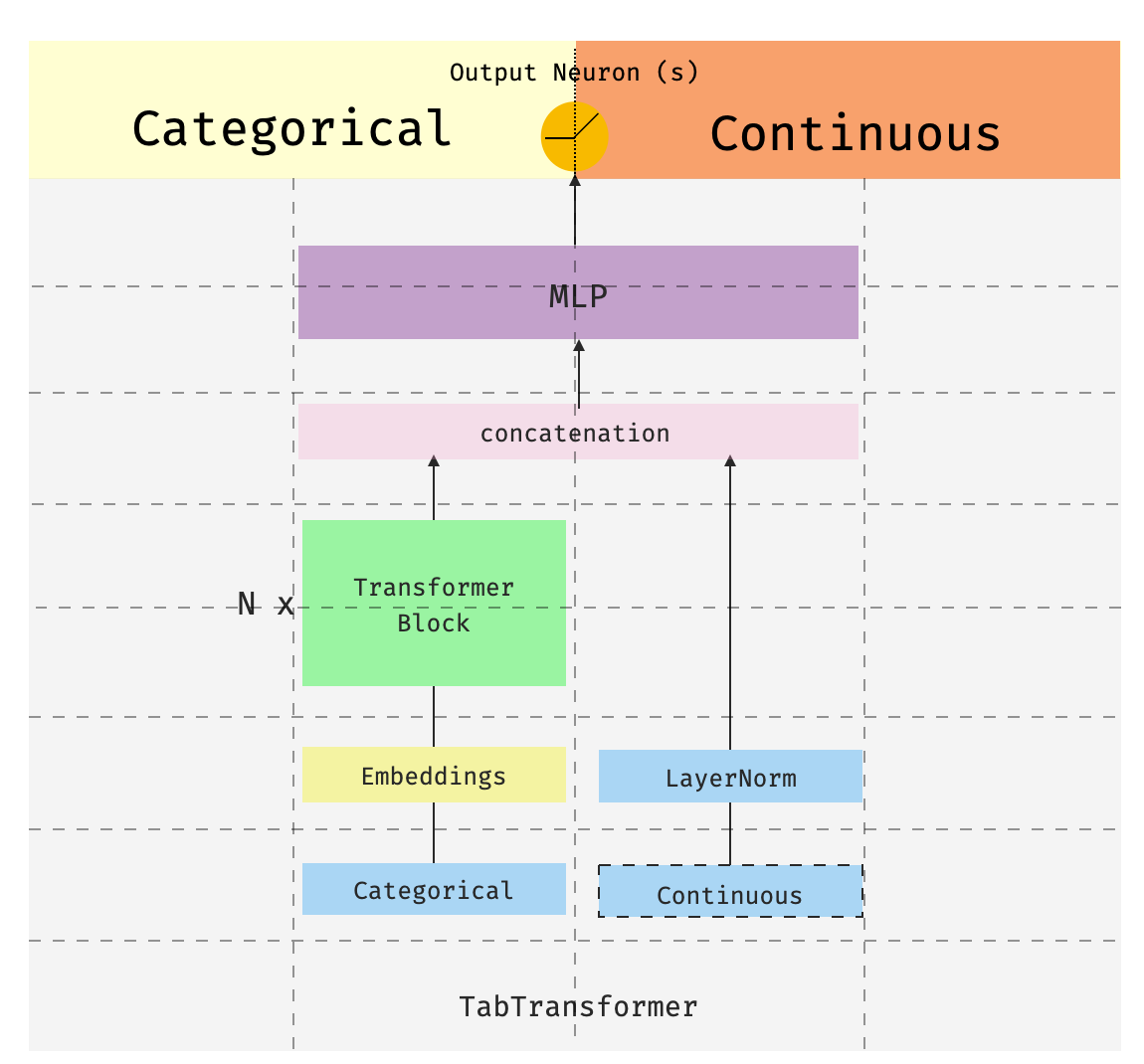

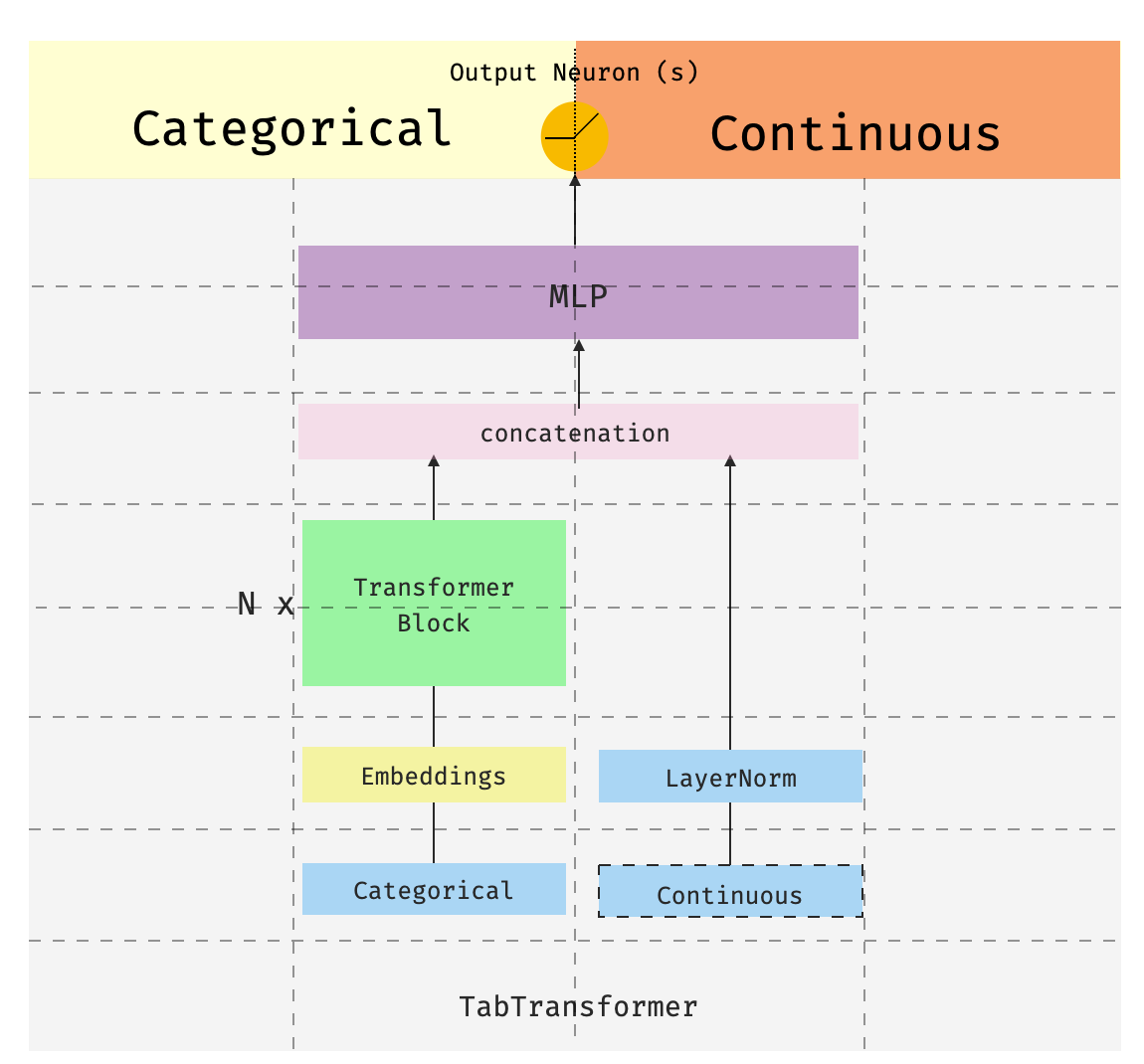

This is the third in a series of posts introducing pytorch-widedeep, a flexible package for combining tabular data with text and images (which can also be used for "standard" tabular data alone). The…

By Machine Learning Mastery -

2020-09-01

AutoML refers to techniques for automatically discovering the best-performing model for a given dataset. When applied to neural networks, this involves both discovering the model architecture and the ...

By Medium -

2020-12-03

This tutorial covers the entire ML process, from data ingestion and preprocessing to model training, hyperparameter tuning, prediction, and storing the model for later use. We will complete all these…