By Medium -

2020-12-02

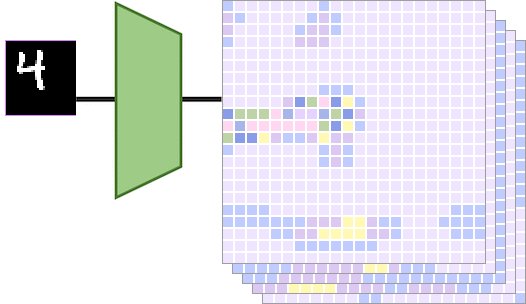

Deep Learning harnesses the power of Big Data by building deep neural architectures that try to approximate a function f(x) that maps an input x to its corresponding label y. The Universal…

By Medium -

2020-12-09

In the first part of our tutorial on neural networks, we explained the basic concepts about neural networks, from the math behind them to implementing neural networks in Python without any hidden…

By Medium -

2020-07-03

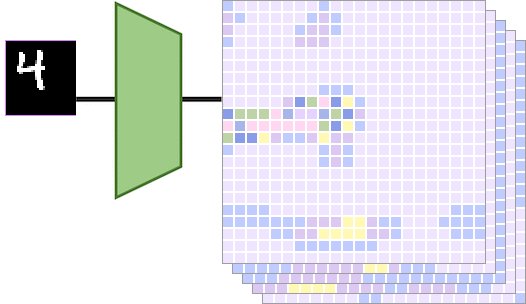

This project was made as part of the Deep Learning with PyTorch: Zero to GANs course.

By datasciencecentral -

2020-12-27

Deep Learning is a new area of Machine Learning research that has been gaining significant media interest owing to the role it is playing in artificial intel…

By KDnuggets -

2020-12-15

An extensive overview of Active Learning, explaining how it works and how it can assist with data labeling, as well as its performance and potential limitations.

By Medium -

2020-10-05

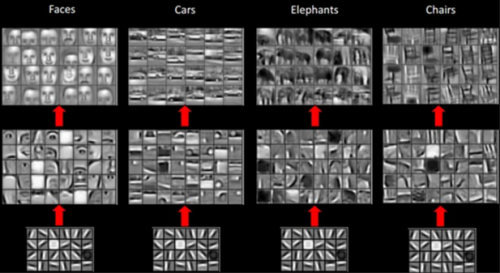

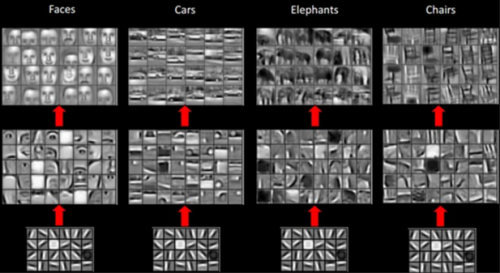

Multi-layer Perceptrons (MLPs) are standard neural networks with fully connected layers, where each input unit is connected to each output unit. We rarely use these kinds of layers when working with…