By Medium -

2020-12-09

In the first part of our tutorial on neural networks, we explained the basic concepts, from the math behind them to implementing them in Python without any hidden…

By DeepAI -

2019-05-17

Batch Normalization is a technique for training neural networks that standardizes the inputs to a layer over each mini-batch, a step called normalizing.
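
As a rough illustration of the normalizing step described above, here is a minimal sketch in plain Python. It is not taken from the linked article: the function name `batch_normalize` and the parameters `gamma`, `beta`, and `eps` are assumptions chosen for this example, and it covers only the training-time computation over one mini-batch (no running statistics for inference).

```python
import math


def batch_normalize(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Standardize each feature (column) of a mini-batch.

    batch: list of rows, each row a list of floats (one value per feature).
    gamma, beta: hypothetical scale and shift, shared by all features here.
    eps: small constant that avoids division by zero.
    """
    n = len(batch)
    num_features = len(batch[0])
    normalized = [[0.0] * num_features for _ in range(n)]

    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        # Biased (population) variance over the mini-batch.
        var = sum((x - mean) ** 2 for x in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            normalized[i][j] = gamma * (column[i] - mean) * inv_std + beta

    return normalized


# Two features on very different scales end up on a comparable scale.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
out = batch_normalize(batch)
```

After the call, each column of `out` has a mean of approximately zero and a variance close to one. Deep learning libraries usually learn a separate scale and shift per feature; the single shared pair here is a simplification.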

By datasciencecentral -

2020-12-27

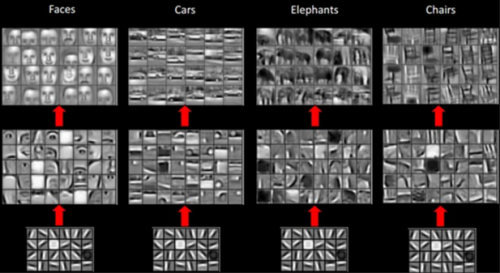

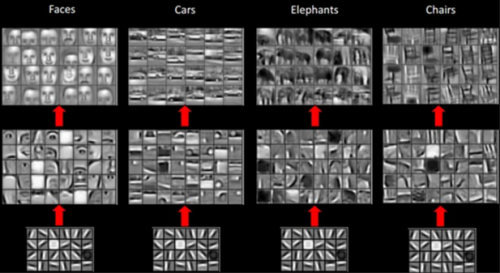

Deep Learning is a new area of Machine Learning research that has been gaining significant media interest owing to the role it is playing in artificial intel…

By Medium -

2020-10-05

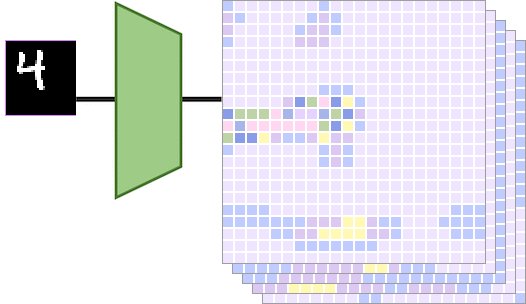

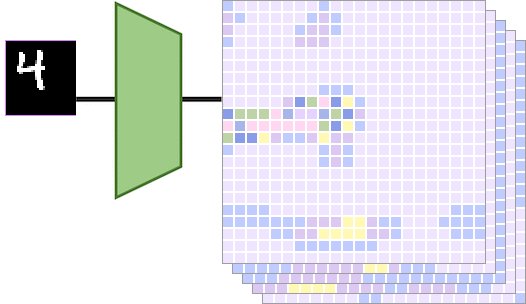

Multi-layer Perceptrons (MLPs) are standard neural networks with fully connected layers, where each input unit is connected to each output unit. We rarely use these kinds of layers when working with…

By Medium -

2020-07-03

This project was made as part of Deep Learning with PyTorch: Zero to GANs course.

By Microsoft Research -

2021-01-19

Microsoft and CMU researchers begin to unravel 3 mysteries in deep learning related to ensemble, knowledge distillation & self-distillation. Discover how their work leads to the first theoretical proo ...