By DeepAI -

2020-11-19

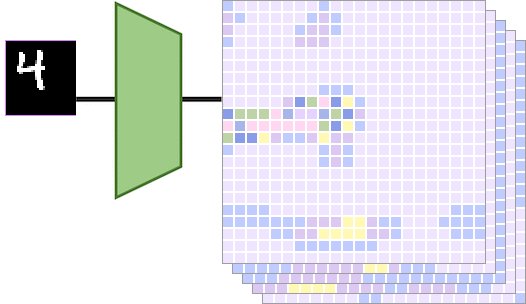

11/19/20 - We present a new generative autoencoder model with dual contradistinctive losses to improve generative autoencoder that performs s...

By Medium -

2020-07-03

This project was created as part of the Deep Learning with PyTorch: Zero to GANs course.

By Medium -

2020-12-09

In the first part of our tutorial on neural networks, we explained the basic concepts about neural networks, from the math behind them to implementing neural networks in Python without any hidden…

By datasciencecentral -

2020-12-27

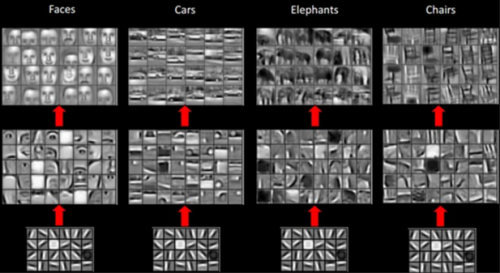

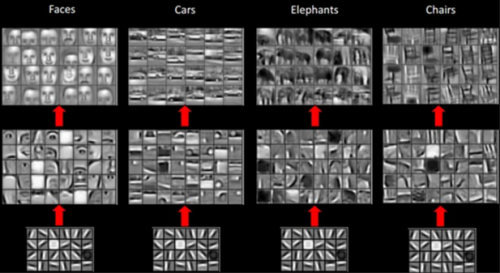

Deep Learning is a new area of Machine Learning research that has been gaining significant media interest owing to the role it is playing in artificial intel…

By Google AI Blog -

2020-12-19

Posted by Yun-Ta Tsai and Rohit Pandey, Software Engineers, Google Research. Professional portrait photographers are able to create com...

By realpython -

2021-01-22

Let's look at how to utilize image fingerprinting to perform near-duplicate image detection.