By Synced | AI Technology & Industry Review -

2021-01-06

A new model surpassed human baseline performance on the challenging natural language understanding benchmark.

By The Gradient -

2020-11-21

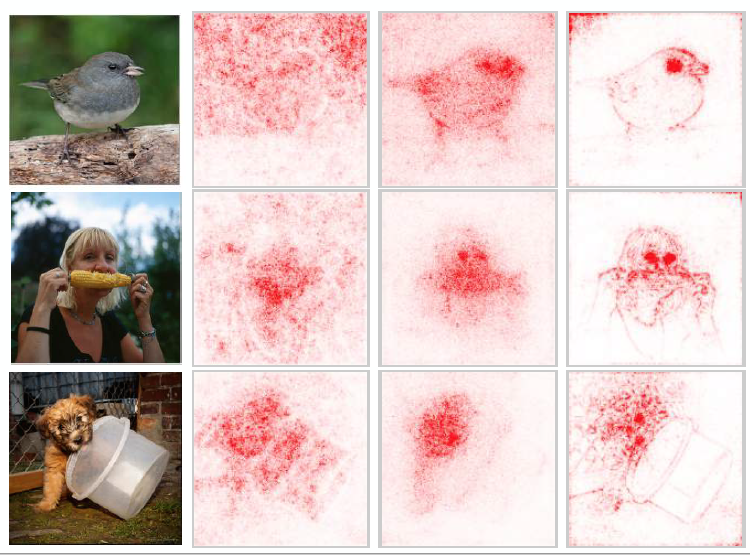

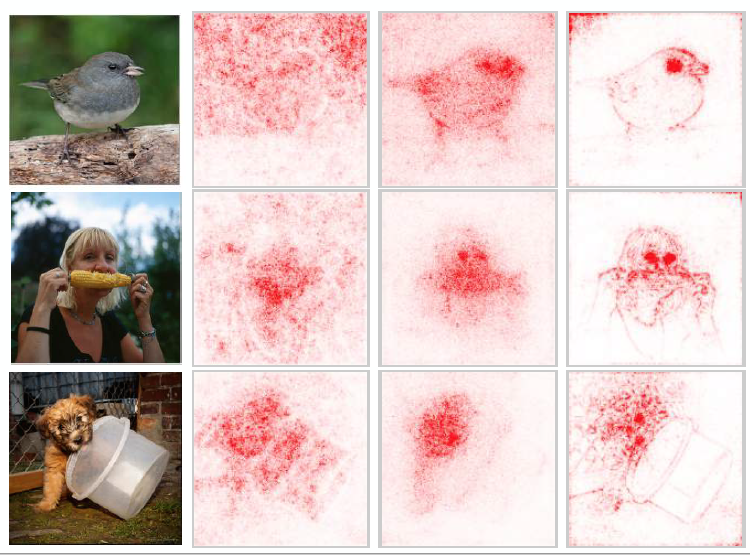

A broad overview of the sub-field of machine learning interpretability: conceptual frameworks, existing research, and future directions.

By Machine Learning Mastery -

2020-09-01

AutoML refers to techniques for automatically discovering the best-performing model for a given dataset. When applied to neural networks, this involves both discovering the model architecture and the ...

By Medium -

2021-02-16

If you have worked with any kind of forecasting model, you will know how laborious it can be at times, especially when trying to predict multiple variables. From identifying if a time-series is…

By Google AI Blog -

2020-11-20

Posted by James Wexler, Software Developer, and Ian Tenney, Software Engineer, Google Research

As natural language processing (NLP) models...

By Medium -

2021-02-22

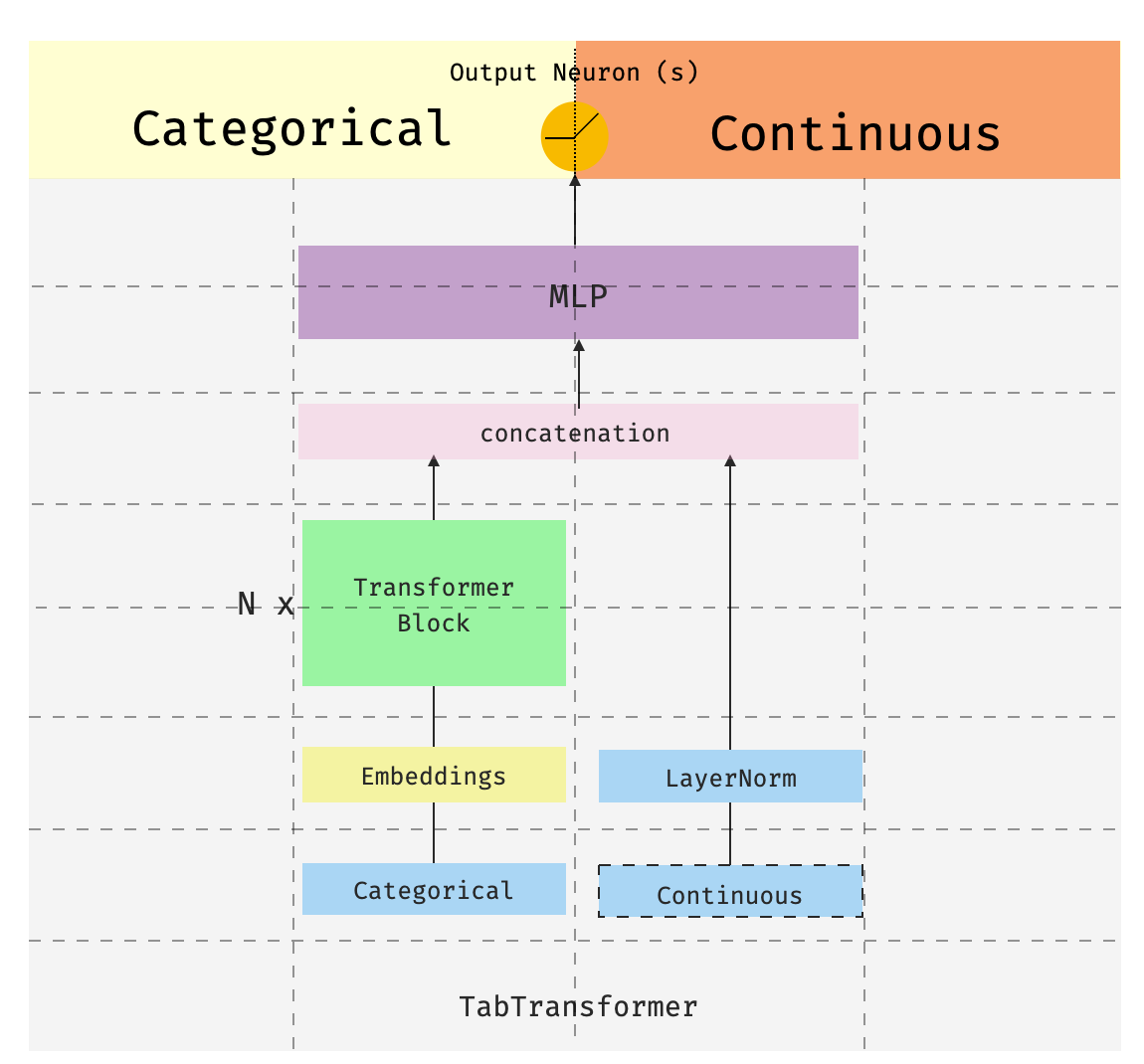

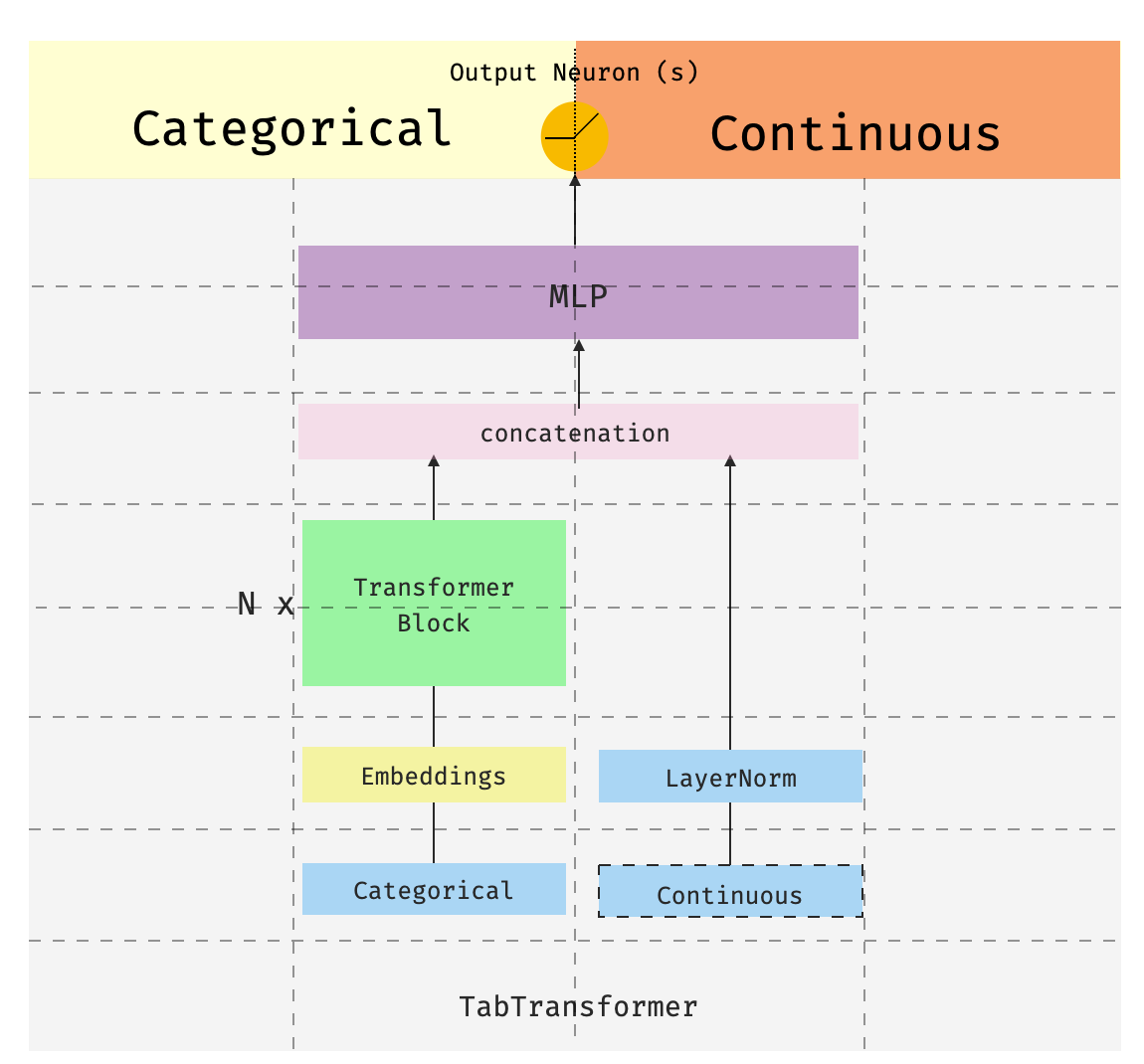

This is the third in a series of posts introducing pytorch-widedeep, a flexible package to combine tabular data with text and images (that could also be used for “standard” tabular data alone). The…