AI 360: 01/03/2021. Unified Transformer, Sebastian Ruder, OpenAI's DALL-E, GLOM and StudioGAN

By lamaai - 2021-03-02

Topics

- NLP (0.27)

- Machine_Learning (0.17)

- UX (0.09)

Similar Articles

Google trained a trillion-parameter AI language model

By VentureBeat - 2021-01-12

Researchers at Google claim to have trained a natural language model containing over a trillion parameters.

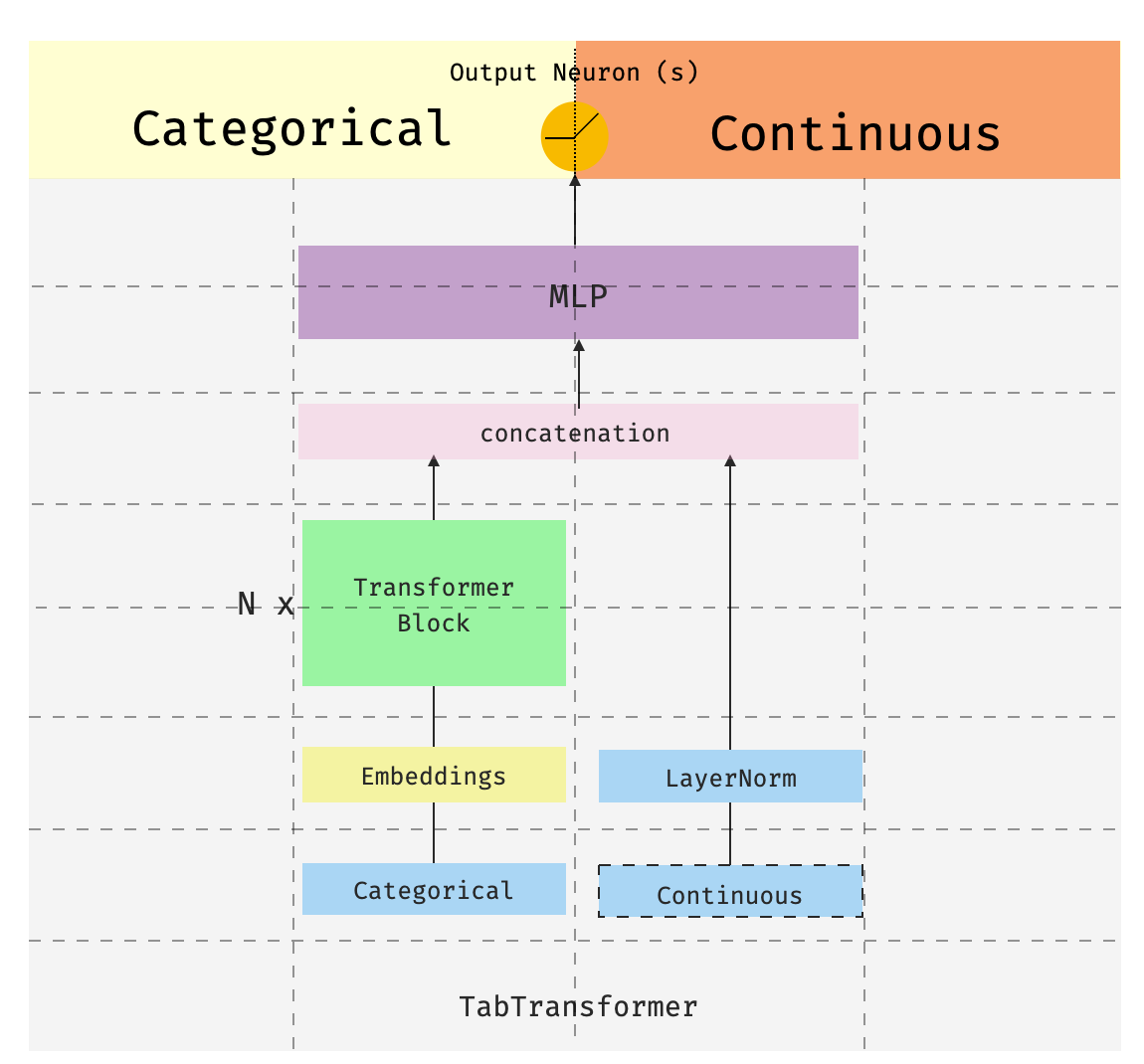

pytorch-widedeep: deep learning for tabular data

By Medium - 2021-02-22

This is the third of a series of posts introducing pytorch-widedeep, a flexible package to combine tabular data with text and images (that could also be used for “standard” tabular data alone). The…

Introducing ABENA: BERT Natural Language Processing for Twi

By Medium - 2020-10-23

Transformer Language Modeling for Akuapem and Asante Twi

Interpretability, Explainability, and Machine Learning – What Data Scientists Need to Know

By KDnuggets - 2020-11-04

The terms “interpretability,” “explainability” and “black box” are tossed about a lot in the context of machine learning, but what do they really mean, and why do they matter?

7 models on HuggingFace you probably didn’t know existed

By Medium - 2021-02-19

Hugging Face has been on top of every NLP (Natural Language Processing) practitioner's mind with their transformers and datasets libraries. In 2020, we saw some major upgrades in both these libraries…

FastFormers: 233x Faster Transformers inference on CPU

By Medium - 2020-11-04

Since the birth of BERT, Transformers have dominated NLP in nearly every language-related task, whether it is question answering, sentiment analysis, text classification or text…