By Medium -

2021-01-04

By Medium -

2021-01-04

A groundbreaking and relatively new discovery upends classical statistics with relevant implications for data science practitioners and…

By KDnuggets -

2020-11-20

By KDnuggets -

2020-11-20

Data science work typically requires a big lift near the end to increase the accuracy of any model developed. These five recommendations will help improve your machine learning models and help your pr ...

By Medium -

2021-02-02

By Medium -

2021-02-02

How to outperform the benchmark in clothes recognition with fastai and DeepFashion Dataset. How to use fastai models in PyTorch. Code, explanation, evaluation on the user data.

By Medium -

2021-03-18

By Medium -

2021-03-18

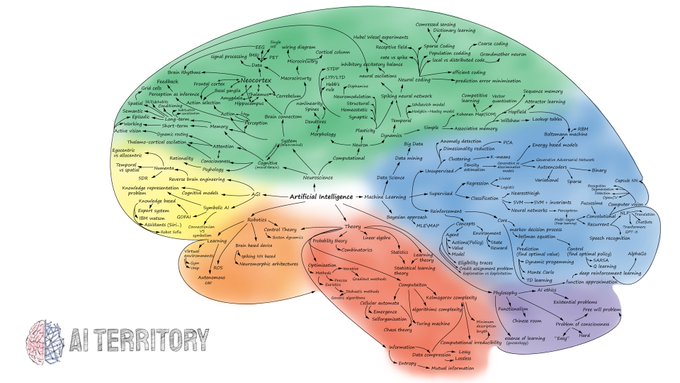

Machine Learning is the path to a better and advanced future. A Machine Learning Developer is the most demanding job in 2021 and it is going to increase by 20–30% in the upcoming 3–5 years. Machine…

By The Gradient -

2020-11-21

By The Gradient -

2020-11-21

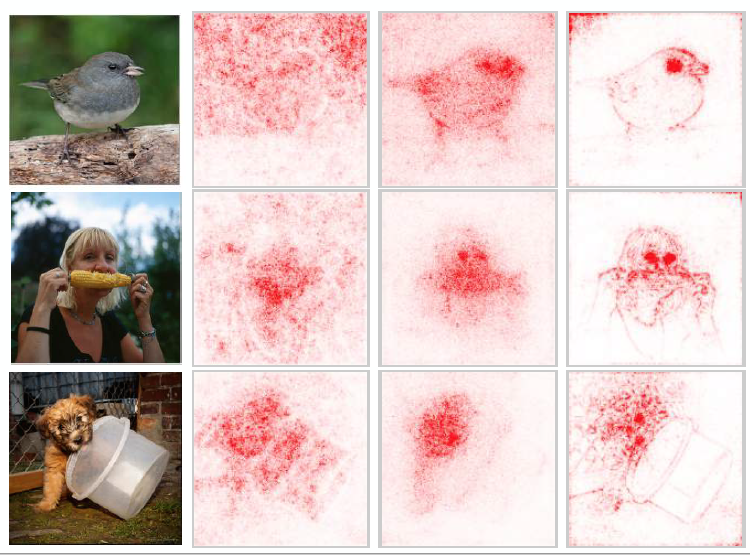

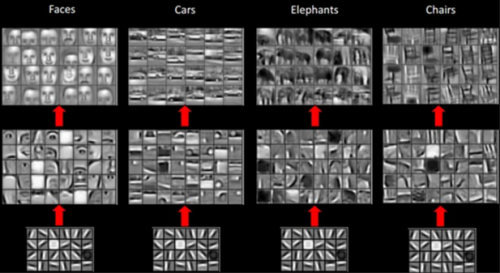

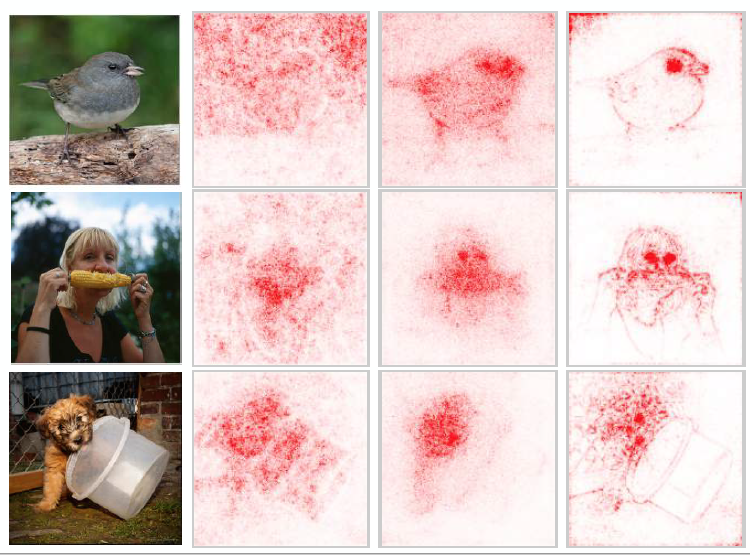

A broad overview of the sub-field of machine learning interpretability; conceptual frameworks, existing research, and future directions.

By Machine Learning Mastery -

2019-01-17

By Machine Learning Mastery -

2019-01-17

Batch normalization is a technique designed to automatically standardize the inputs to a layer in a deep learning neural network. Once implemented, batch normalization has the effect of dramatically a ...