By Medium -

2020-11-05

Here is what you tell them.

By The Gradient -

2020-11-21

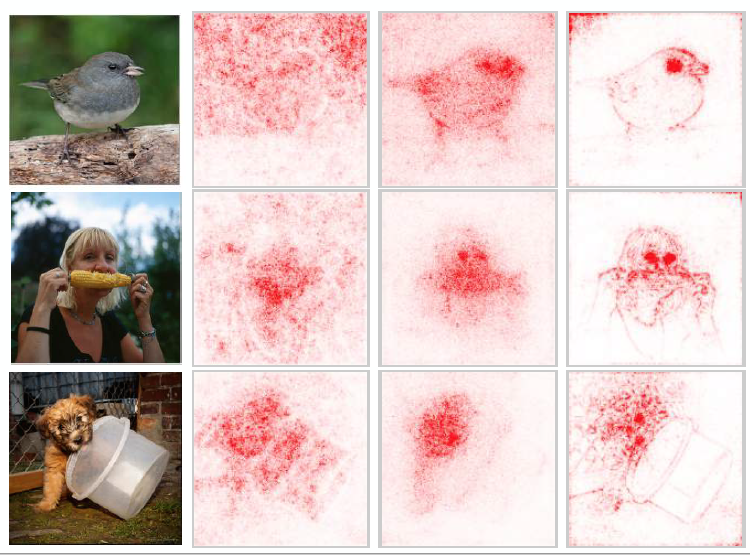

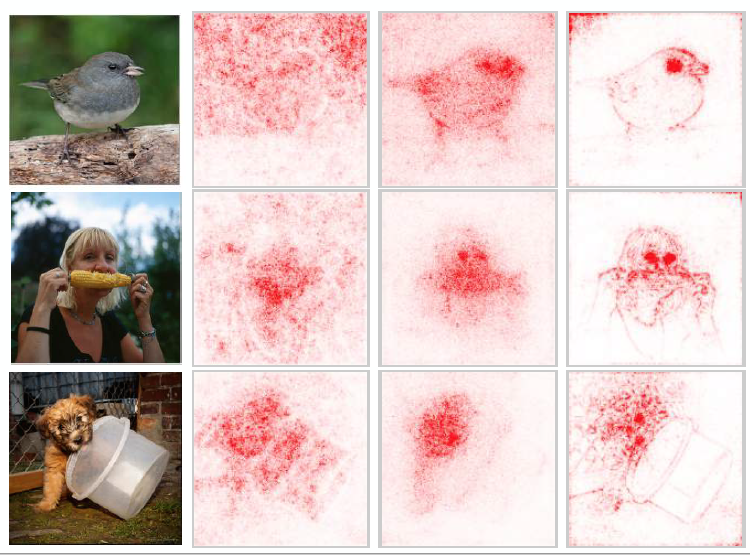

A broad overview of the sub-field of machine learning interpretability: conceptual frameworks, existing research, and future directions.

By Google AI Blog -

2020-11-20

Posted by James Wexler, Software Developer, and Ian Tenney, Software Engineer, Google Research. As natural language processing (NLP) models...

By Spell -

2020-12-02

In this guest article from Fritz AI, we'll outline the key considerations in building a mobile-focused ML pipeline from end to end.

By Medium -

2020-10-23

Transformer Language Modeling for Akuapem and Asante Twi

By Google AI Blog -

2021-03-09

Posted by Artsiom Ablavatski and Marat Dukhan, Software Engineers, Google Research. On-device inference of neural networks enables a var...