By Google AI Blog -

2020-11-20

Posted by James Wexler, Software Developer, and Ian Tenney, Software Engineer, Google Research. As natural language processing (NLP) models...

By Psychology Today -

2021-02-09

MovieBERT, a new AI machine learning tool, can rate movie content in seconds—in advance of any film production.

By VentureBeat -

2021-01-12

Researchers at Google claim to have trained a natural language model containing over a trillion parameters.

By Nature -

2021-03-03

A remarkable AI can write like humans — but with no understanding of what it’s saying.

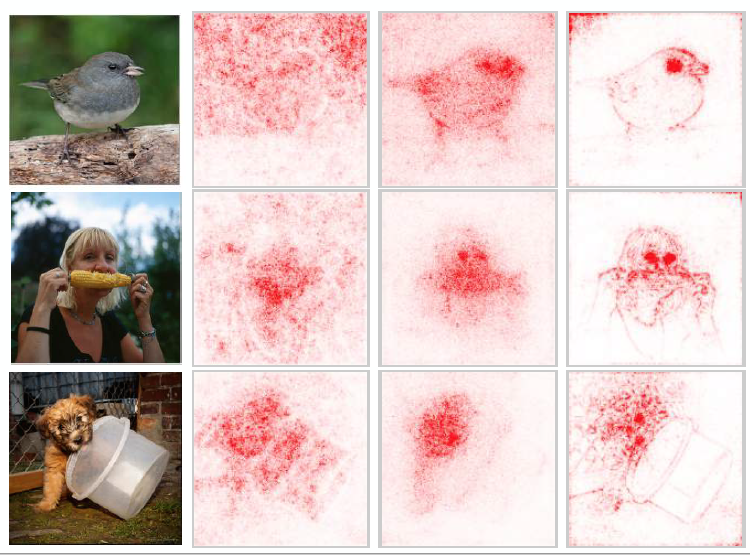

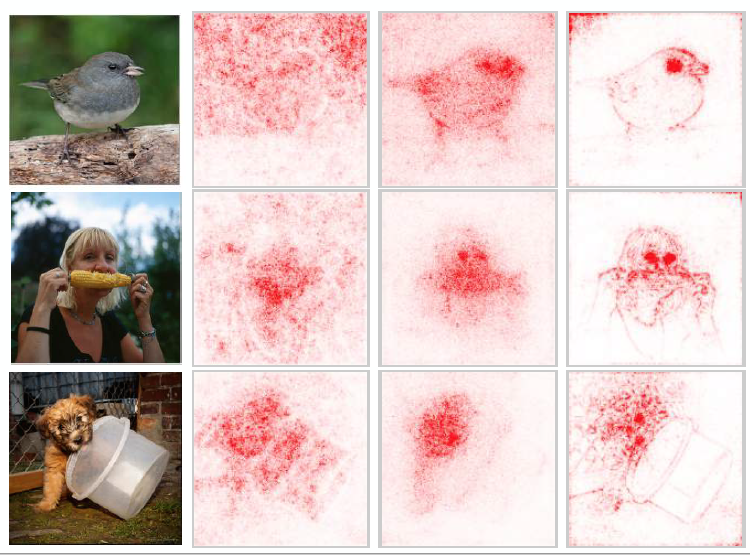

By The Gradient -

2020-11-21

A broad overview of the sub-field of machine learning interpretability; conceptual frameworks, existing research, and future directions.

By Facebook -

2021-03-10

Teaching computers to understand how humans write and speak, known as natural language processing or NLP, is one of the oldest challenges in AI research....