By arXiv.org -

2020-10-23

The recent "Text-to-Text Transfer Transformer" (T5) leveraged a unified text-to-text format and scale to attain state-of-the-art results on a wide variety of English-language NLP tasks. In this paper, ...

By arXiv.org -

2020-10-08

Multilingual pre-trained Transformers, such as mBERT (Devlin et al., 2019) and XLM-RoBERTa (Conneau et al., 2020a), have been shown to enable the effective cross-lingual zero-shot transfer. However, t ...

By arXiv.org -

2021-02-28

Modern natural language processing (NLP) methods employ self-supervised pretraining objectives such as masked language modeling to boost the performance of various application tasks. These pretraining ...

By Medium -

2020-12-08

As you know, data science, and more specifically machine learning, is very much en vogue now, so guess what? I decided to enroll in a MOOC to become fluent in data science. But when you start with a…

By arXiv.org -

2020-10-01

Benchmarks such as GLUE have helped drive advances in NLP by incentivizing the creation of more accurate models. While this leaderboard paradigm has been remarkably successful, a historical focus on p ...

By arXiv.org -

2020-10-15

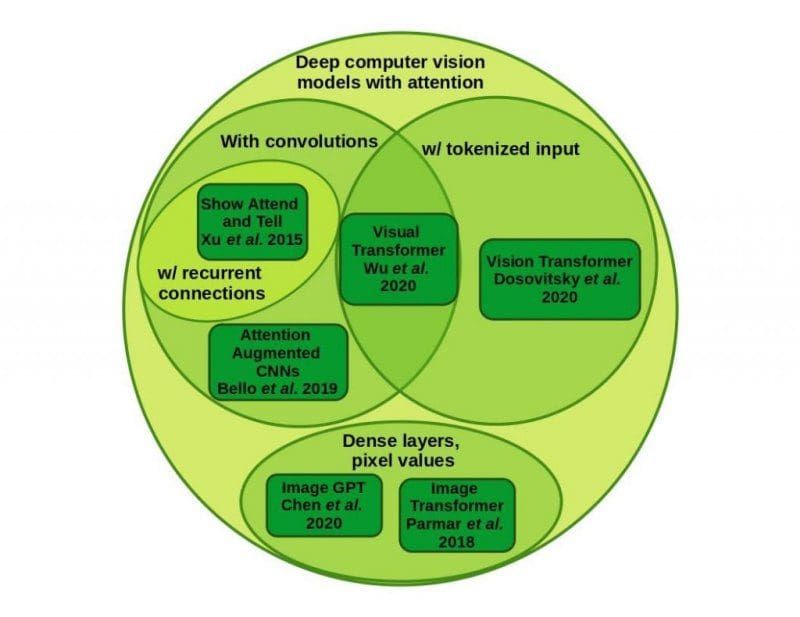

Humans learn language by listening, speaking, writing, reading, and also, via interaction with the multimodal real world. Existing language pre-training frameworks show the effectiveness of text-only ...