By Medium -

2021-03-10

By Medium -

2021-03-10

Sentiment analysis is typically limited by the length of text that can be processed by transformer models like BERT. We will learn how to work around this.

By Medium -

2021-01-17

By Medium -

2021-01-17

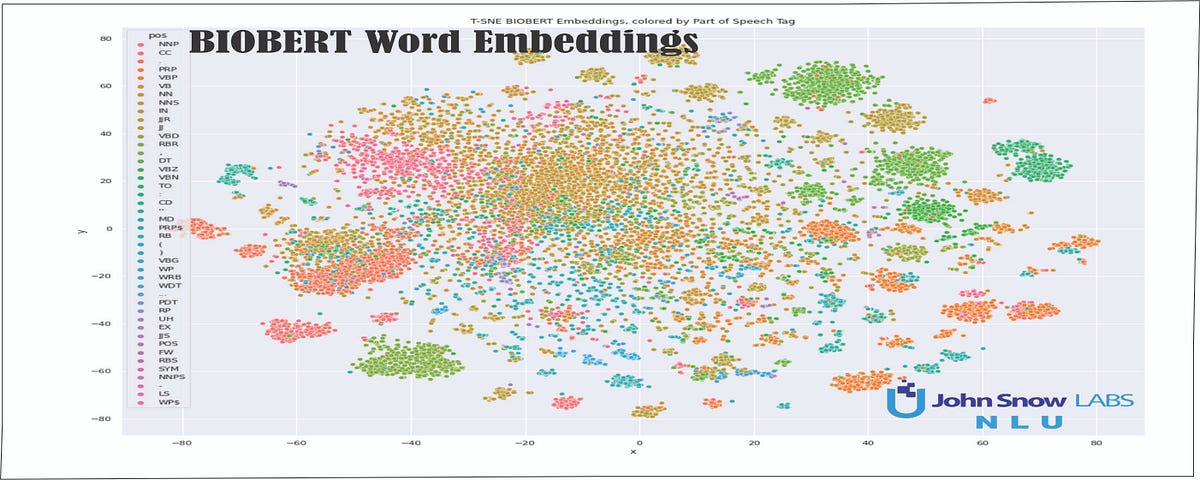

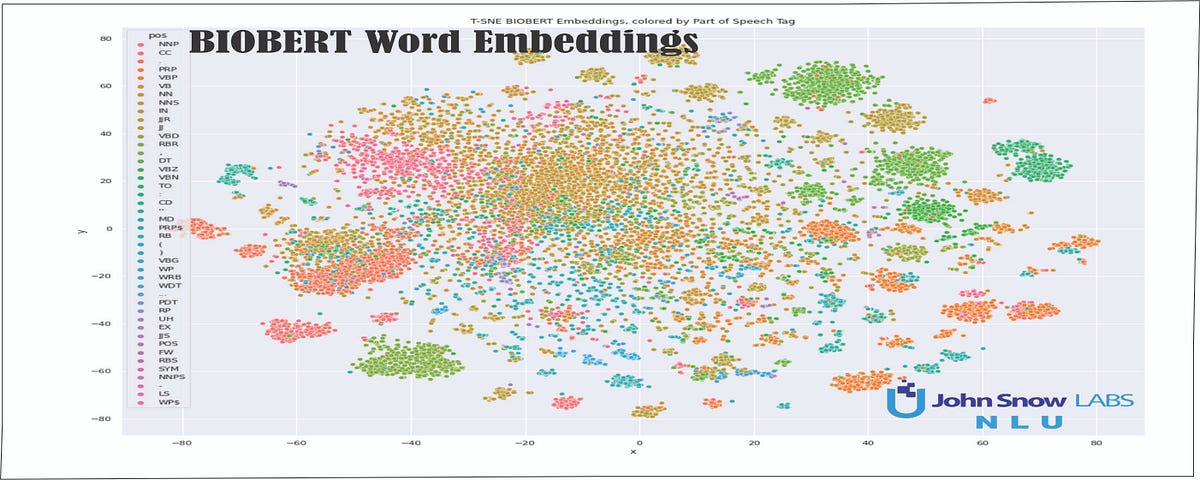

Including Part of Speech, Named Entity Recognition, Emotion Classification in the same line! With Bonus t-SNE plots! John Snow Labs NLU library gives you 1000+ NLP models and 100+ Word Embeddings in…

By DataCamp Community -

2021-02-05

By DataCamp Community -

2021-02-05

PYTHON for FINANCE introduces you to ALGORITHMIC TRADING, time-series data, and other common financial analyses!

By Compose Articles -

2017-07-25

By Compose Articles -

2017-07-25

In his latest Compose Write Stuff article on Mastering PostgreSQL Tools, Lucero Del Alba writes about mastering full-text and phrase search in PostgreSQL 9.6. Yes, PostgreSQL 9.6 has been finally roll ...

By Medium -

2021-01-26

By Medium -

2021-01-26

Text preprocessing on GPUs is coming to RAPIDS cuML! This is very exciting as efficient string operations are known to be a difficult problem with GPUs. Based on the work by the RAPIDS cuDF team…

By realpython -

2020-12-15

By realpython -

2020-12-15

Once you learn about for loops in Python, you know that using an index to access items in a sequence isn't very Pythonic. So what do you do when you need that index value? In this tutorial, you'll lea ...