By Medium -

2021-02-01

Exploring the advantages and pitfalls of 9 common distance measures used in Machine Learning applications.

By Medium -

2020-12-28

Why tf-idf sometimes fails to accurately capture word importance, and what we can use instead.

By hackernoon -

2021-02-19

First, we will extract the docx archive. Next, we will read and map the file word/document.xml to a Java object.

By Google Cloud Blog -

2020-12-27

Document AI Platform is a unified console for document processing in the cloud.

By KDnuggets -

2021-03-11

CLIP is a bridge between computer vision and natural language processing. I'm here to break CLIP down for you in an accessible and fun read! In this post, I'll cover what CLIP is, how CLIP works, and ...

By Distill -

2021-02-27

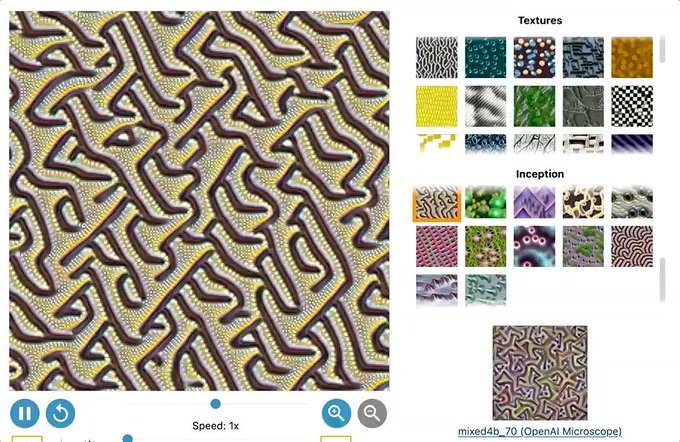

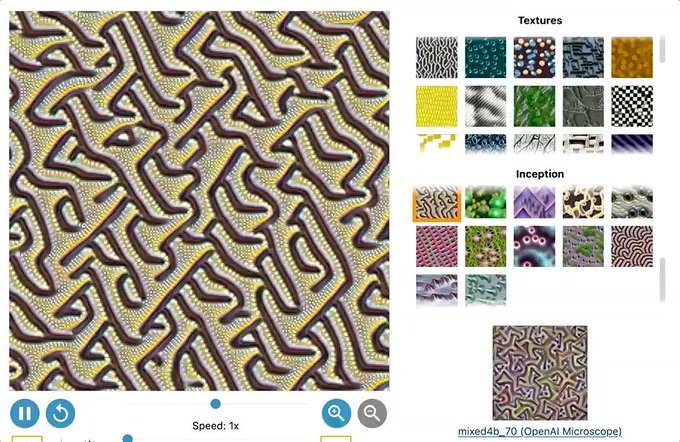

Neural Cellular Automata learn to generate textures, exhibiting surprising properties.