By Medium -

2021-01-15

I recently came across the article “How HDBSCAN works” by Leland McInnes, and I was struck by the informative, accessible way he explained…

By The Gradient -

2020-11-21

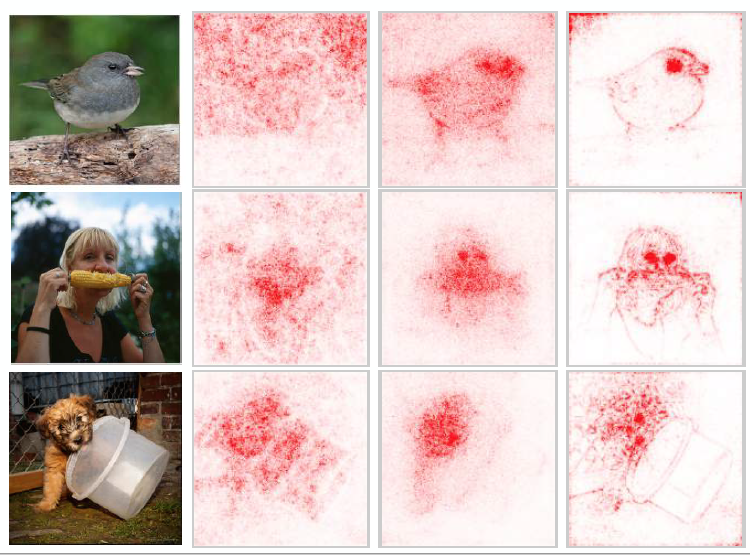

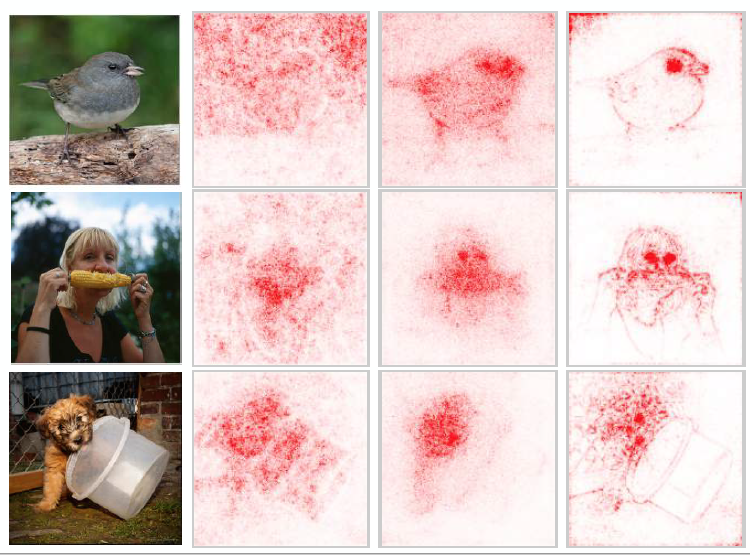

A broad overview of the sub-field of machine learning interpretability; conceptual frameworks, existing research, and future directions.

By Medium -

2020-12-14

What it is, how it helps, the tools used, and an experiment

By Distill -

2021-02-27

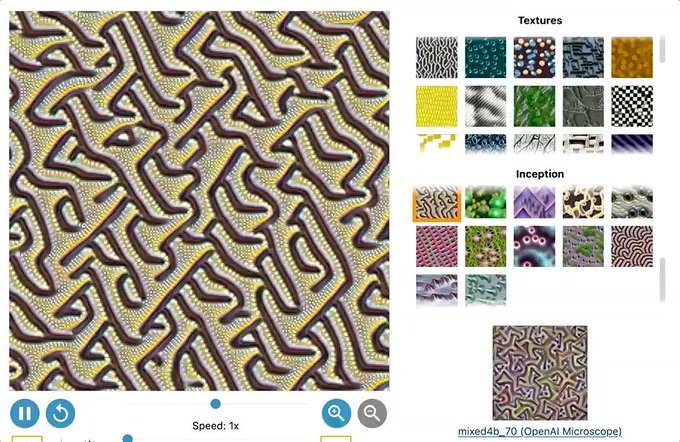

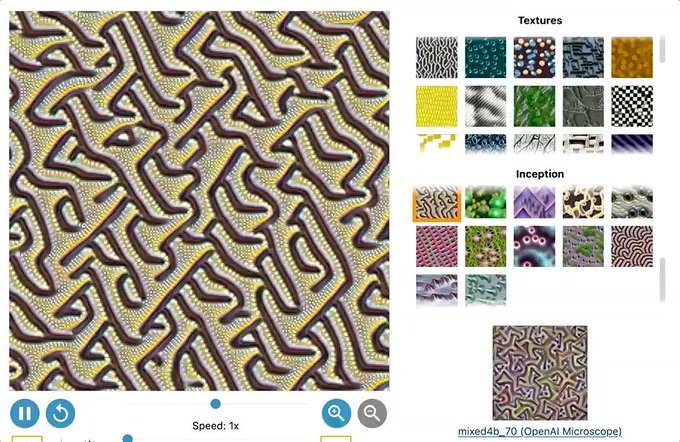

Neural Cellular Automata learn to generate textures, exhibiting surprising properties.

By Bram.us -

2021-03-09

Let's take a look at how to create Scroll-Linked Animations with Element-based Offsets, using @scroll-timeline from the “Scroll-linked Animations” CSS Specification.

By Medium -

2020-12-10

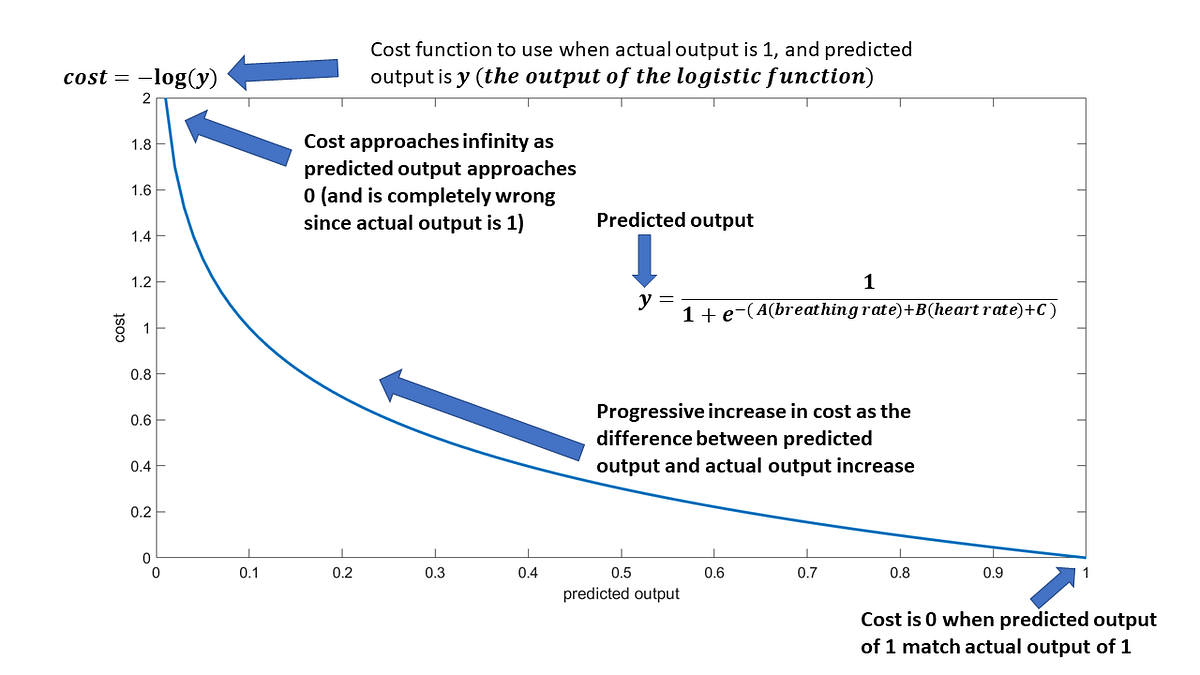

A/B testing is a tool that allows one to check whether a certain causal relationship holds. For example, a data scientist working for an e-commerce platform might want to increase the revenue by…