By Machine Learning Mastery -

2020-10-04

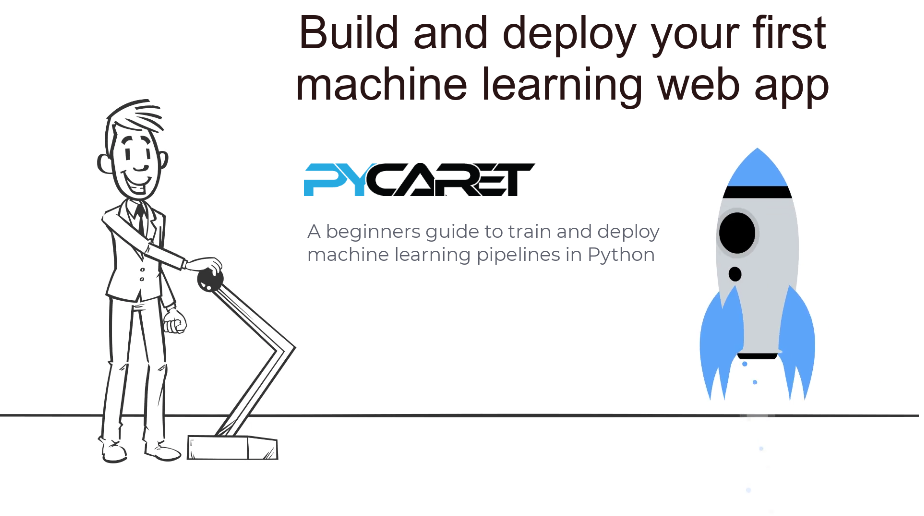

Regression is a modeling task that involves predicting a numerical value given an input. Algorithms used for regression tasks are also referred to as “regression” algorithms, with the most widely known ...

By R-bloggers -

2020-12-24

Linear Regression with R: Chances are you've had some prior exposure to machine learning and statistics. Basically, that's all linear regression is – a simple

By KDnuggets -

2021-01-05

This post aims to help you get started putting your trained machine learning models into production using a Flask API.

By Medium -

2021-01-31

Electricity demand forecasting is vital for any organization that operates in, or is affected by, the electricity market. Electricity storage technologies have not caught up to accommodate the current…

By Medium -

2021-01-04

A groundbreaking and relatively new discovery upends classical statistics with relevant implications for data science practitioners and…

By Medium -

2020-12-21

Over 70% of the world’s fresh water is used for irrigation, and naturally there is a huge demand for accurate estimates and metrics that could aid sensible water use in the agricultural sector…