By huggingface -

2021-03-12

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

By MachineCurve -

2021-02-02

Explanations and code examples showing you how to use K-fold Cross Validation for Machine Learning model evaluation/testing with PyTorch.

By MachineCurve -

2021-02-15

Machine Translation with Python and Transformers: learn how to build a simple translation pipeline and extend it to more languages.

By Medium -

2020-12-10

While learning about time series forecasting, sooner or later you will encounter the vastly popular Prophet model, developed by Facebook. It gained lots of popularity due to the fact that it provides…

By Medium -

2021-02-04

When we start learning how to build deep neural networks with Keras, the first method we use to input data is simply loading it into NumPy arrays. At some point, especially when working with images…

By Medium -

2020-12-08

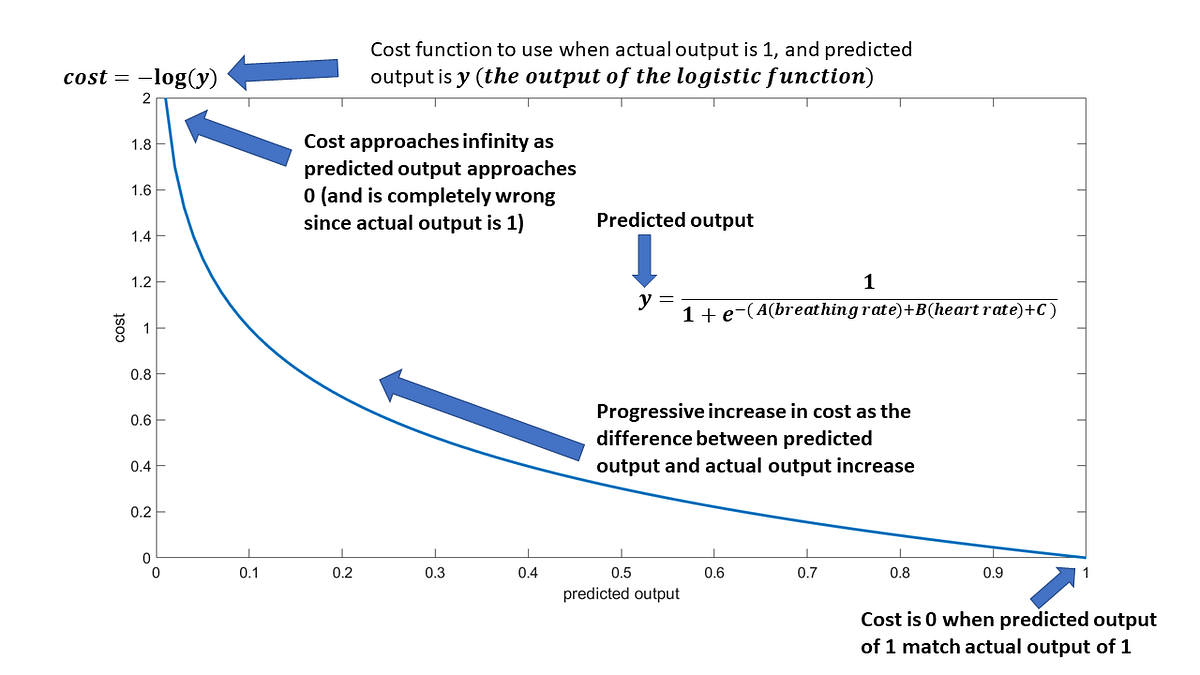

As you know, data science, and more specifically machine learning, is very much en vogue now, so guess what? I decided to enroll in a MOOC to become fluent in data science. But when you start with a…