By realpython -

2021-02-02

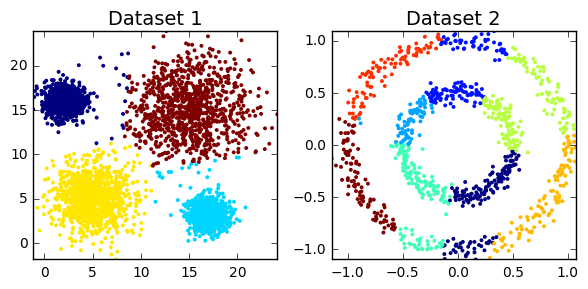

In this tutorial, you'll learn what the stochastic gradient descent algorithm is, how it works, and how to implement it with Python and NumPy.

By Code Wall -

2019-02-10

In this final article about MongoDB, we will round out our knowledge of how to write queries with this technology. In particular, we will focus on how […]

By Medium -

2020-12-13

In today’s Medium post, I will teach you how to implement the Q-Learning algorithm. But first, I will explain the idea behind Q-Learning and its limitations. Please be sure to have some…

By Medium -

2021-01-18

A practical deep dive into GPU-accelerated Python ML on cross-vendor graphics cards (AMD, Qualcomm, NVIDIA & friends) using Vulkan Kompute

By DataCamp Community -

2021-02-05

Python for Finance introduces you to algorithmic trading, time-series data, and other common financial analyses!

By huggingface -

2021-03-12

We’re on a journey to advance and democratize artificial intelligence through open source and open science.