By arXiv.org -

2020-10-15

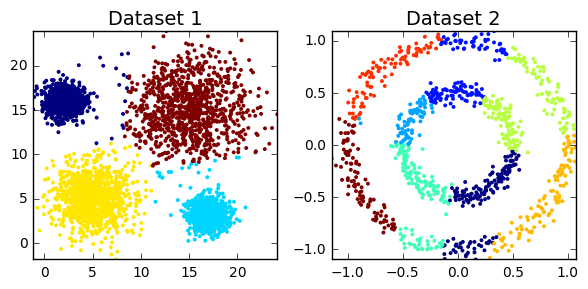

Humans learn language by listening, speaking, writing, reading, and also, via interaction with the multimodal real world. Existing language pre-training frameworks show the effectiveness of text-only ...

By MIT News | Massachusetts Institute of Technology -

2020-10-21

MIT researchers have created a machine learning system that aims to help linguists decipher lost languages.

By Medium -

2020-12-14

We’re constantly communicating at work, even when we’re not speaking.

By Velasticity Newsletter -

2021-02-05

On October 14th, 2020, researchers from OpenAI, the Stanford Institute for Human-Centered Artificial Intelligence, and other universities convened to discuss open research questions surrounding GPT-3,

By inDepthDev -

2021-01-24

Angular CLI schematics offer us a way to add, scaffold and update app-related files and modules. You'll be guided through some common but currently undocumented helper functions you can use to achieve ...

By DEV Community -

2020-12-30

React.js is currently the most popular JavaScript library for front end developers. Invented by Faceb... Tagged with react, javascript, webdev, beginners.