By KDnuggets -

2020-11-04

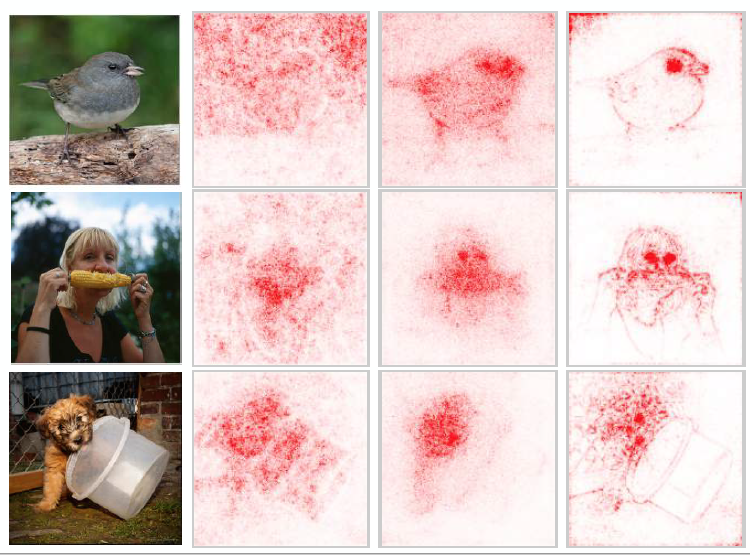

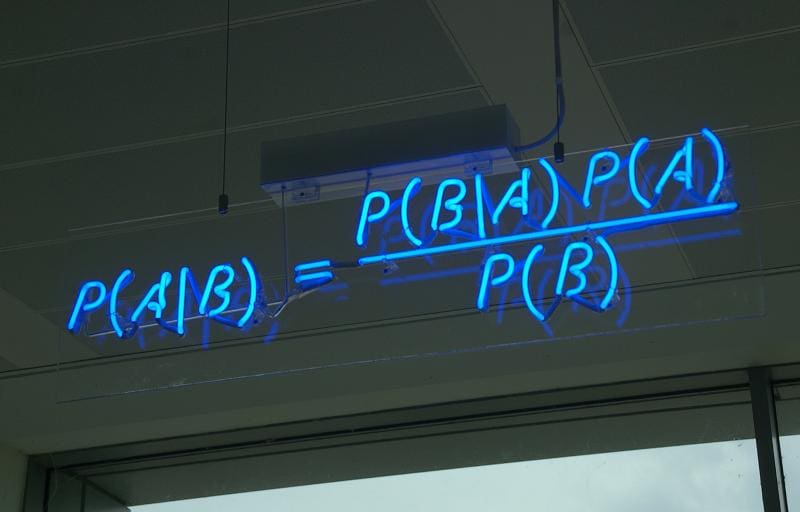

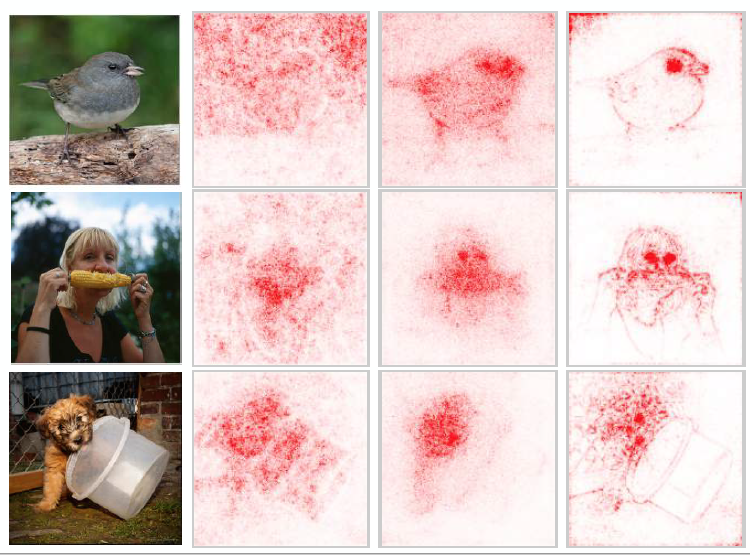

The terms “interpretability,” “explainability” and “black box” are tossed about a lot in the context of machine learning, but what do they really mean, and why do they matter?

By Medium -

2020-11-05

Here is what you tell them.

By Machine Learning Mastery -

2020-09-01

AutoML refers to techniques for automatically discovering the best-performing model for a given dataset. When applied to neural networks, this involves both discovering the model architecture and the ...

By The Gradient -

2020-11-21

A broad overview of the subfield of machine learning interpretability: conceptual frameworks, existing research, and future directions.

By Medium -

2021-02-18

In our last post we took a broad look at model observability and the role it serves in the machine learning workflow. In particular, we discussed the promise of model observability & model monitoring…

By KDnuggets -

2020-12-07

We outline some of the common pitfalls of machine learning for time series forecasting, with a look at time delayed predictions, autocorrelations, stationarity, accuracy metrics, and more.