By KDnuggets -

2020-11-20

Data science work typically requires a big lift near the end to increase the accuracy of any model developed. These five recommendations will help improve your machine learning models and help your pr ...

By Medium -

2020-12-21

Over 70% of the world’s fresh water is used for irrigation, and naturally there is a huge demand for accurate estimates and metrics that could aid sensible water use in the agricultural sector…

By The Rasa Blog: Machine Learning Powered by Open Source -

2020-09-23

SpaCy is an excellent tool for NLP, and Rasa has supported it from the start. You might already be aware of the spaCy components in the Rasa library. Rasa includes support for a spaCy tokenizer, featu ...

By Microsoft Research -

2021-01-19

Microsoft and CMU researchers begin to unravel 3 mysteries in deep learning related to ensemble, knowledge distillation & self-distillation. Discover how their work leads to the first theoretical proo ...

By KDnuggets -

2021-03-14

Increasing accuracy in your models is often obtained through the first steps of data transformations. This guide explains the difference between the key feature scaling methods of standardization and ...

By Medium -

2020-07-25

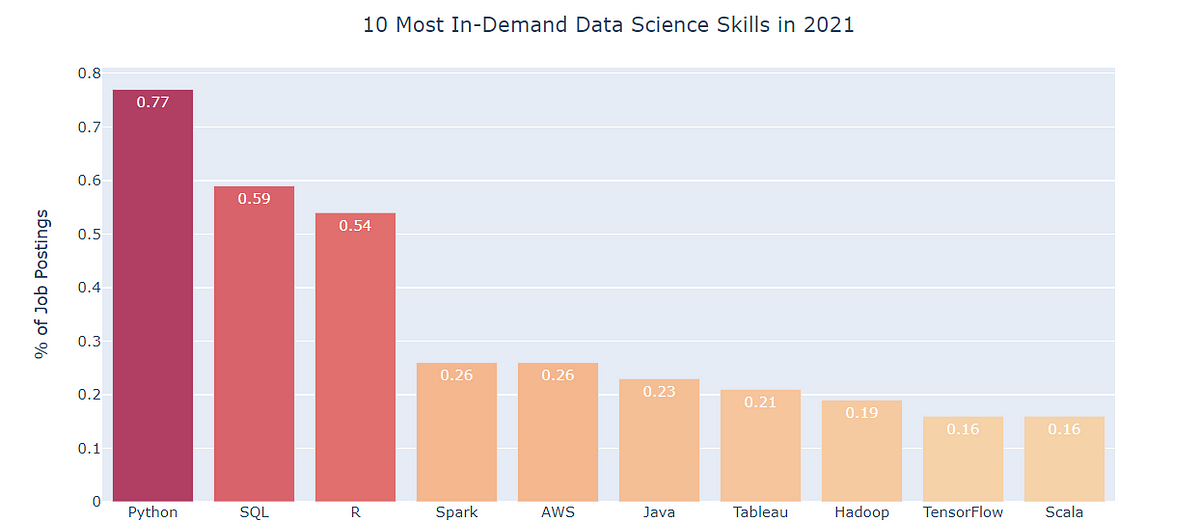

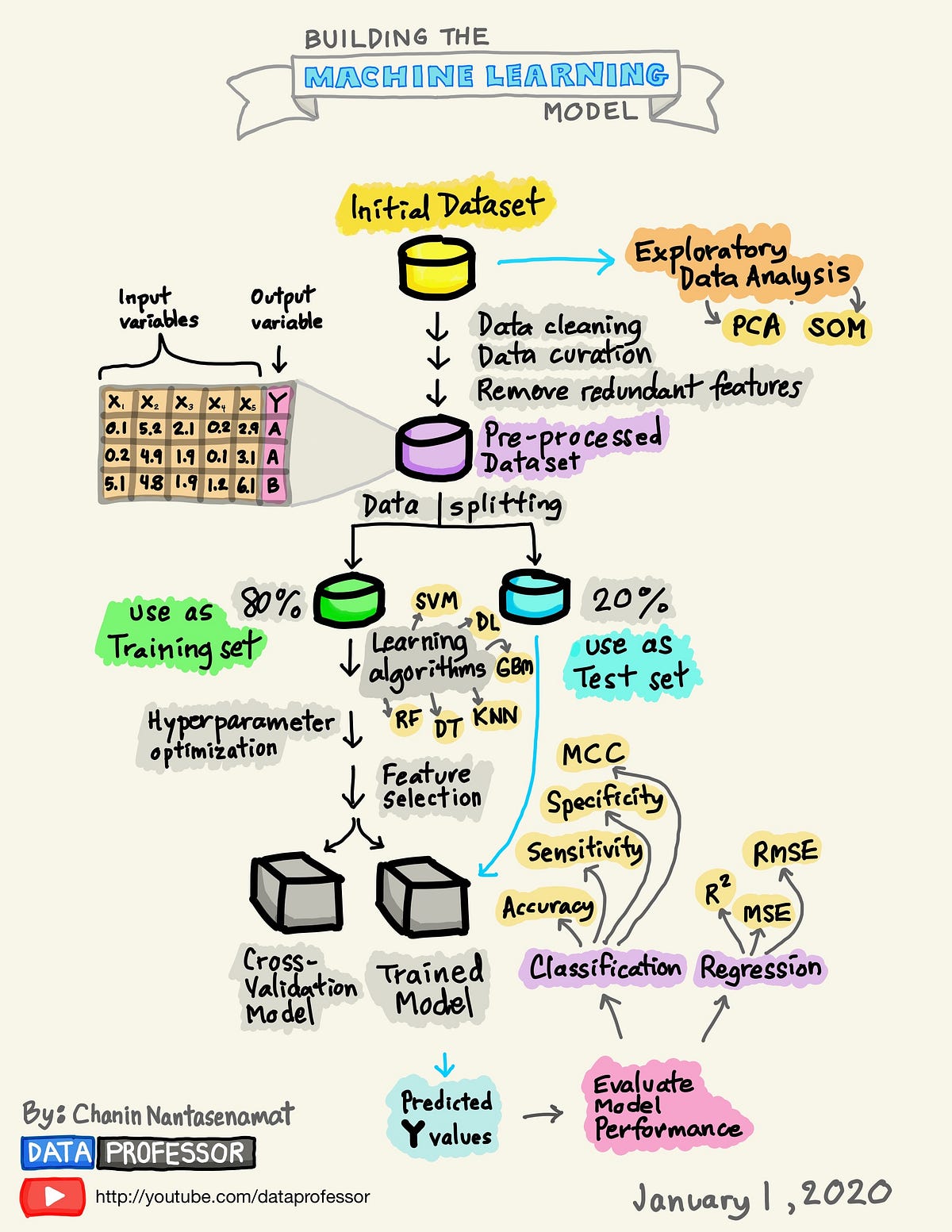

A Visual Guide to Learning Data Science