By Medium -

2021-02-18

In our last post, we took a broad look at model observability and the role it serves in the machine learning workflow. In particular, we discussed the promise of model observability and model monitoring…

By Medium -

2021-01-04

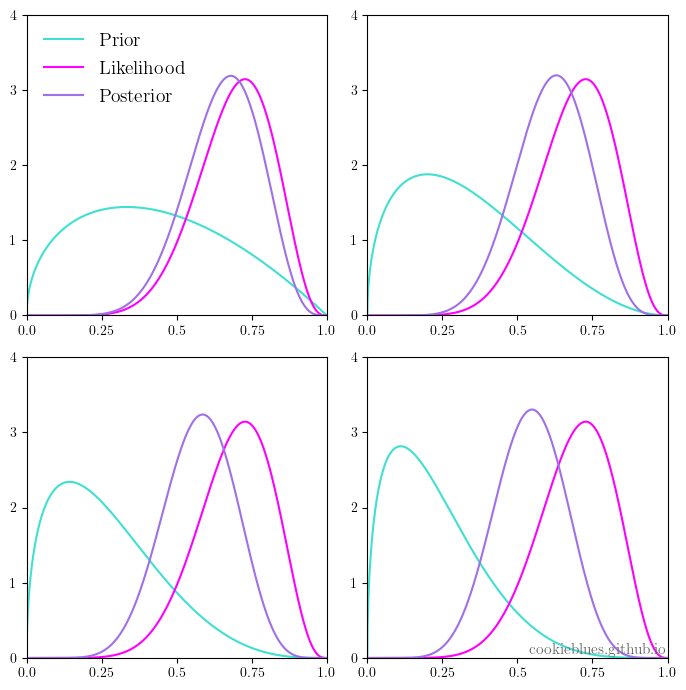

A groundbreaking and relatively new discovery upends classical statistics with relevant implications for data science practitioners and…

By huggingface -

2021-03-12

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

By Machine Learning Mastery -

2019-01-17

Batch normalization is a technique designed to automatically standardize the inputs to a layer in a deep learning neural network. Once implemented, batch normalization has the effect of dramatically a ...
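As a rough illustration of the standardization described above, here is a minimal sketch in plain Python. It assumes a mini-batch given as a list of rows (one row per example, one column per feature) and covers only the training-time forward pass. Each feature is normalized with its own batch mean and variance, then scaled by `gamma` and shifted by `beta`. The function name and parameter names are illustrative, and the running averages that real layers keep for inference are deliberately omitted.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-time batch normalization sketch (hypothetical helper).

    Each feature (column) of `batch` is standardized to zero mean and
    unit variance across the mini-batch, then scaled by gamma[j] and
    shifted by beta[j]. Running statistics for inference are not kept.
    """
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n
        # Biased (population) variance of the batch; eps avoids division by zero.
        var = sum((x - mean) ** 2 for x in column) / n
        inv_std = 1.0 / math.sqrt(var + eps)
        for i in range(n):
            x_hat = (batch[i][j] - mean) * inv_std
            out[i][j] = gamma[j] * x_hat + beta[j]
    return out


# Two features on very different scales end up on a comparable scale.
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

With `gamma` set to ones and `beta` to zeros, both columns come out with mean zero and variance just under one (the `eps` term keeps it slightly below). Other values of `gamma` and `beta` let the layer recover whatever scale and offset training finds useful.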

By Medium -

2020-12-14

How to deploy a trained sentiment analysis machine learning model to a REST API using Microsoft ML.NET and ASP.NET Core, in just 15 minutes.

By Medium -

2020-12-03

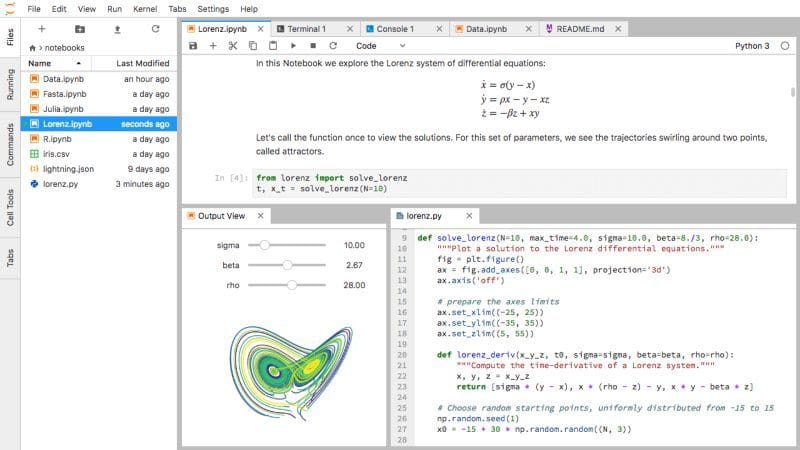

This tutorial covers the entire ML process: data ingestion, pre-processing, model training, hyperparameter tuning, prediction, and storing the model for later use. We will complete all these…