By diginomica -

2020-09-24

How do you work backwards from thousands of disconnected PDFs to the 100,000 suspicious financial transactions they’re reporting on? A combination of hard human work, OCR, data analysis and graph.

By freeCodeCamp.org -

2021-01-12

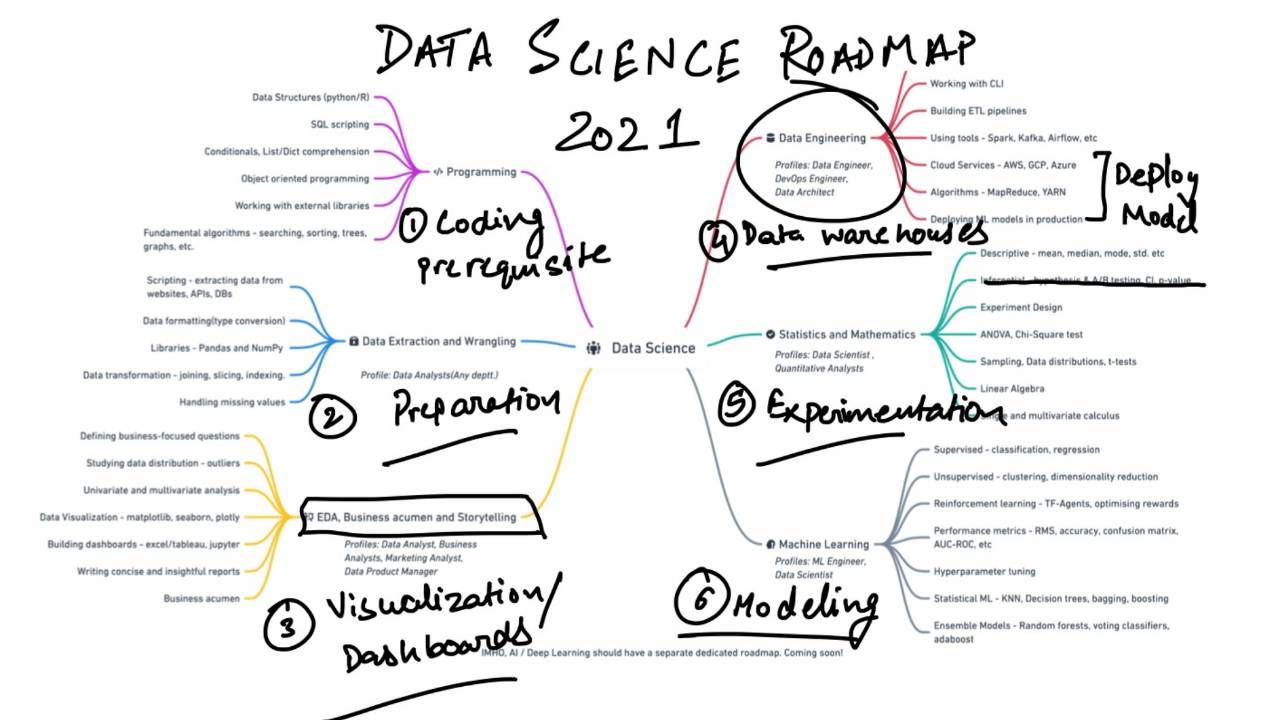

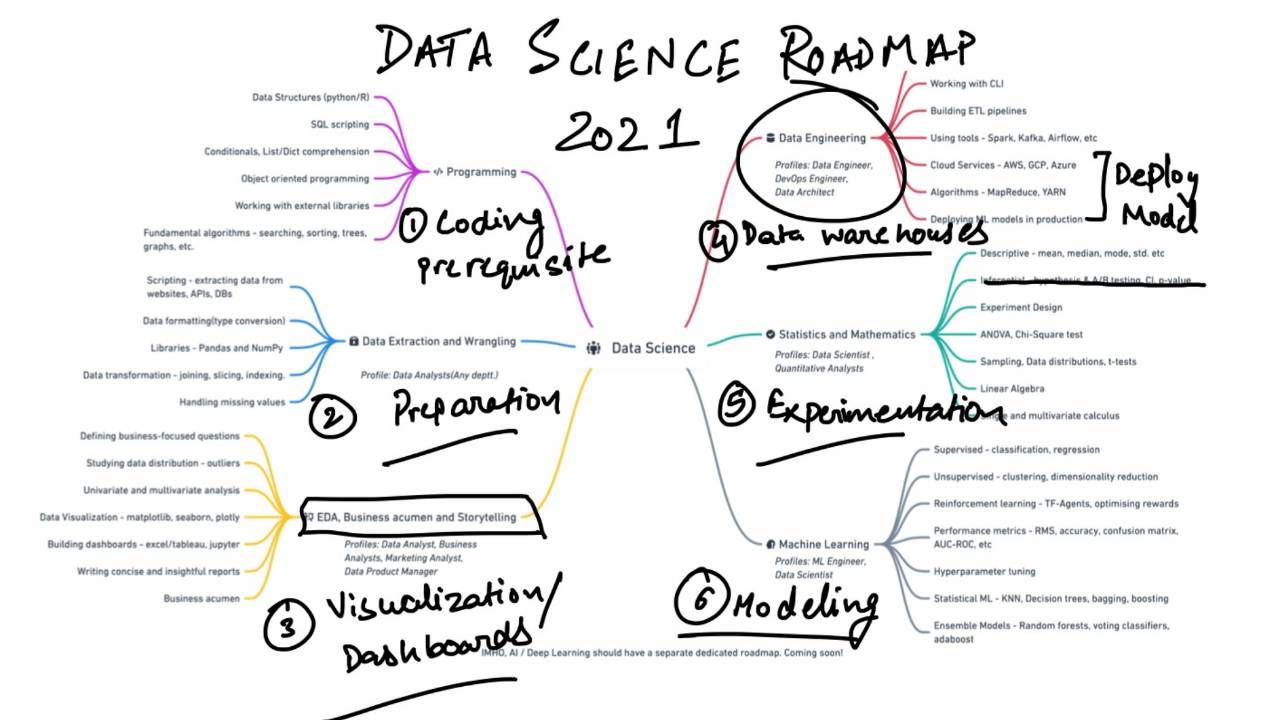

Although nothing really changes but the date, a new year fills everyone with the hope of starting things afresh. If you add in a bit of planning, some well-envisioned goals, and a learning roadmap, yo ...

By DEV Community -

2021-02-06

Data is the new oil, and it's still true in 2021. However, to turn data into insights, we need to ana... Tagged with javascript, webdev, frontend, dataviz.

By DataCamp Community -

2021-02-05

Python for Finance introduces you to algorithmic trading, time-series data, and other common financial analyses!

By DAGsHub Blog -

2021-01-18

Create, maintain, and contribute to a long-living dataset that will update itself automatically across projects, using git and DVC as versioning systems.

By Medium -

2020-12-01

If I learned anything from working as a data engineer, it is that practically any data pipeline fails at some point. Broken connection, broken dependencies, data arriving too late, or some external…