By datasciencecentral -

2020-12-27

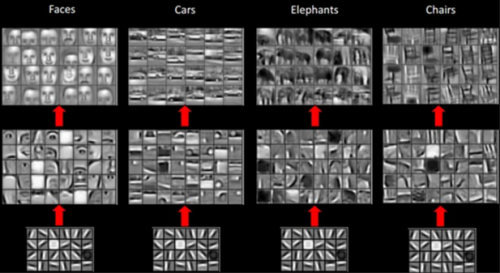

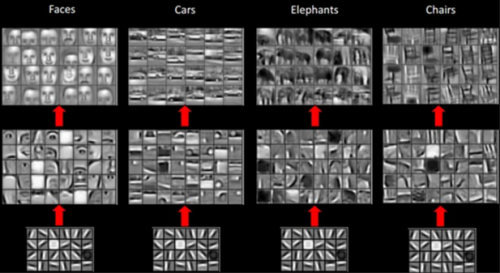

Deep Learning is a new area of Machine Learning research that has been gaining significant media interest owing to the role it is playing in artificial intel…

By Medium -

2020-12-23

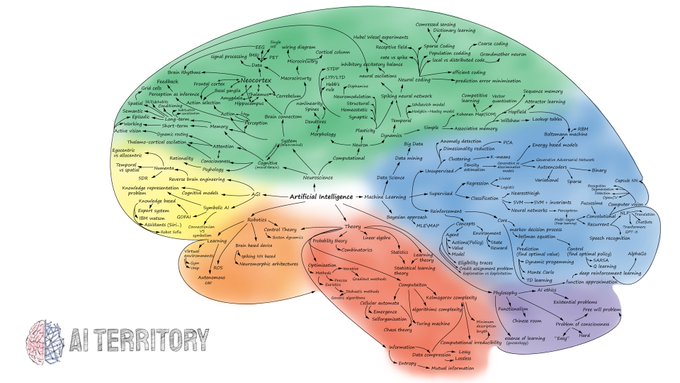

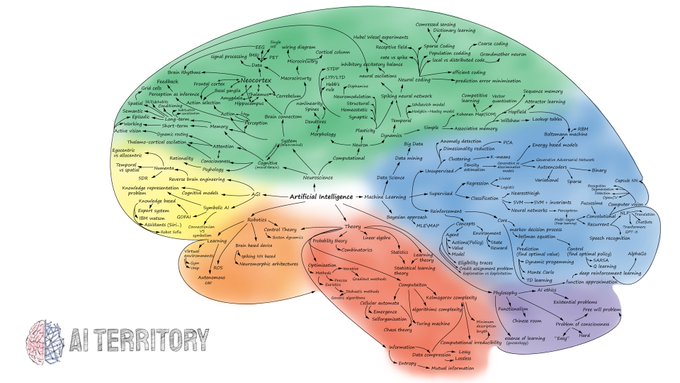

Semantic map of some major topics in the field of artificial intelligence. 200+ keywords. (End of 2020 version)

By Neural | The Next Web -

2021-03-02

The core idea is deceptively simple: every observable phenomenon in the entire universe can be modeled by a neural network. And that means, by extension, the universe itself may be a neural network. V ...

By techxplore -

2020-10-14

Artificial intelligence has arrived in our everyday lives—from search engines to self-driving cars. This has to do with the enormous computing power that has become available in recent years. But new ...

By Microsoft Research -

2021-01-19

Microsoft and CMU researchers begin to unravel three mysteries in deep learning related to ensembles, knowledge distillation, and self-distillation. Discover how their work leads to the first theoretical proo ...

By Medium -

2020-10-08

Modern state-of-the-art neural network architectures are HUGE. For instance, you have probably heard about GPT-3, OpenAI’s newest revolutionary NLP model, capable of writing poetry and interactive…